[January 28, 2025]

Autonomy for the World: Indoor Exploration with Nova 2

At Shield AI, our mission to develop intelligent, autonomous systems extends across diverse applications, from contested airspaces to intricate indoor environments. The first platform we flew our Hivemind Pilot on was the Nova series of quadcopters: compact, highly capable drones designed for indoor exploration, mapping, and reconnaissance in GNSS-denied environments. Nova quadcopters have been deployed in high-stakes, high-impact missions since 2018, most recently in Israel, where they have been used for critical indoor reconnaissance and hostage rescue operations.

This case study focuses on Nova 2, a next-generation platform that builds on the foundation of its predecessor, Nova 1, to address complex 3D environments. Nova quadcopters play a critical role in Shield AI’s broader mission, providing unmatched autonomy for indoor and confined-space operations. Through advancements in compute power, sensor integration, and navigation algorithms, Nova 2 pushes the boundaries of what’s possible, exemplifying the ingenuity and determination driving all of Shield AI’s projects.

Reimagining Indoor Exploration

After releasing Nova 1, we identified several limitations with both the platform and its software. While impressive for its time, Nova 1 struggled with limited computing power and challenges in handling complex 3D environments. We went back to the drawing board and developed Nova 2, a groundbreaking solution.

Nova 2 features a top-of-the-line embedded compute module, the Nvidia Jetson AGX Xavier, typically reserved for larger platforms, and a sensor overhaul that replaced a single camera and 2D lidar with an array of 15 cameras. This advanced system enabled remarkable capabilities, including:

- Dense Matching between aligned depth cameras and Feature Tracking on four RGBD cameras for robust State Estimation.

- 3D Object Detection and Tracking for people and portals.

- Depth-Infilling for complex scenes, using context from HDR RGB data to overcome the limitations of our depth cameras.

- Rapid 3D Exploration using next-best view generation to maximize information gain.

- Full Blackout Navigation with infrared illuminators outside the visible spectrum.

- Real-Time 3D Mapping compressed into floor plan slices for operator use.

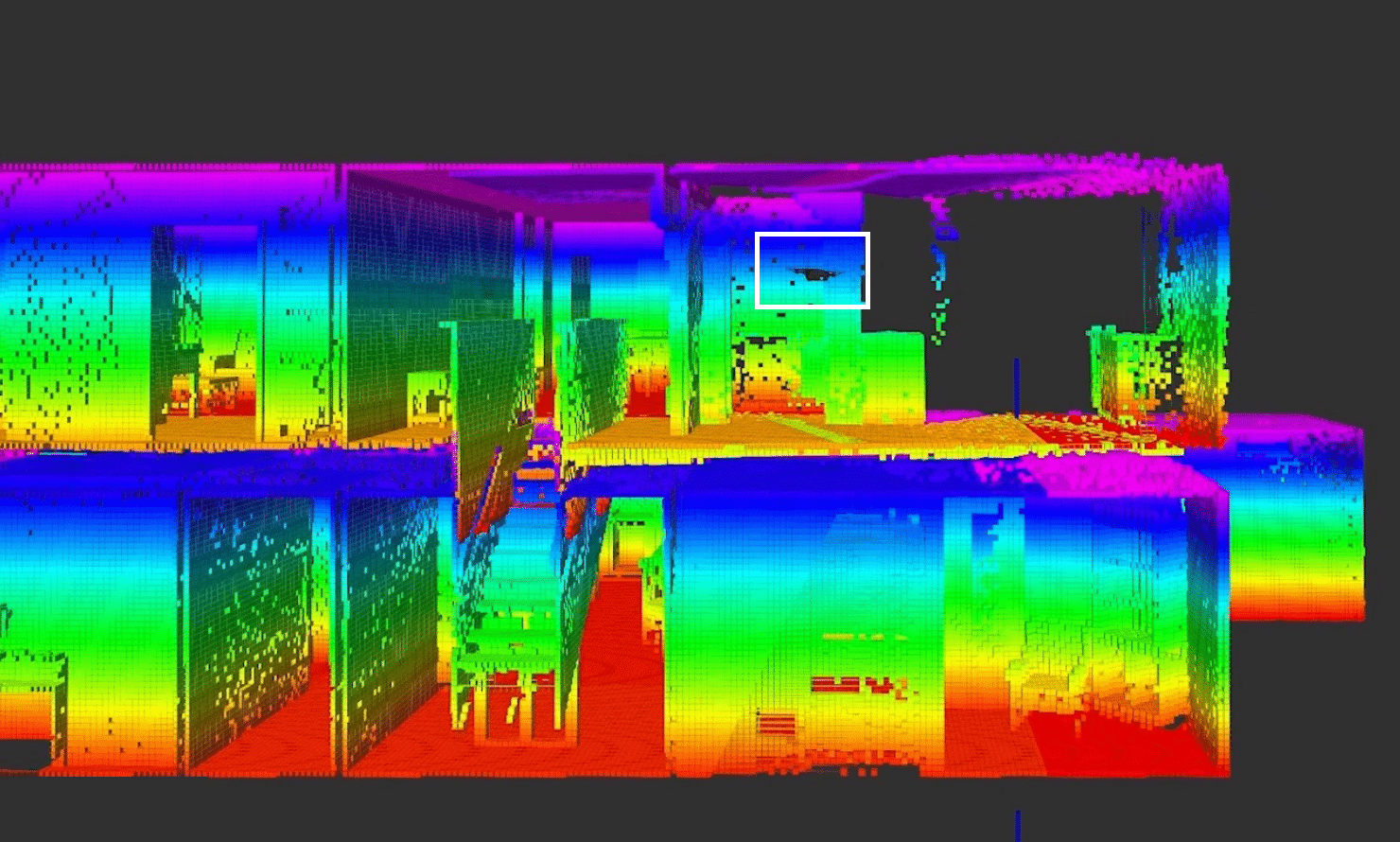

- Dense 3D Mapping at a 5 cm resolution, caching sub-maps to file storage for large-scale environments.

- Loop-Closure Capabilities correcting positional drift by recognizing previously visited locations.

- L1-Adaptive Controller ensuring smooth, robust flight even under disturbances like wind or chipped propellers.
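As a rough illustration of the floor plan slices and 5 cm voxel resolution mentioned in the list above, the sketch below stores occupied voxels as integer indices and flattens one height band into 2D cells. It is a minimal sketch under stated assumptions, not Nova 2's mapping code: the sparse set representation and the names `world_to_voxel` and `floor_plan_slice` are hypothetical and chosen only for this example.

```python
import math
from typing import Iterable, Set, Tuple

VOXEL_SIZE_M = 0.05  # 5 cm resolution, as stated in the text

Voxel = Tuple[int, int, int]  # integer (i, j, k) voxel index
Cell = Tuple[int, int]        # integer (i, j) floor plan cell


def world_to_voxel(x: float, y: float, z: float,
                   voxel_size: float = VOXEL_SIZE_M) -> Voxel:
    """Map a point in meters to the index of the voxel that contains it."""
    return (math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size))


def floor_plan_slice(occupied: Iterable[Voxel],
                     z_min_m: float, z_max_m: float,
                     voxel_size: float = VOXEL_SIZE_M) -> Set[Cell]:
    """Project occupied voxels inside a height band onto the horizontal plane.

    Hypothetical helper: a cell is marked occupied if any voxel in the
    column between z_min_m and z_max_m is occupied.
    """
    k_min = math.floor(z_min_m / voxel_size)
    k_max = math.floor(z_max_m / voxel_size)
    return {(i, j) for (i, j, k) in occupied if k_min <= k <= k_max}
```

Choosing a band around chest height, for example, would keep walls and doorways in the slice while dropping floor and ceiling voxels, which is one plausible way to turn a dense 3D map into something an operator can read at a glance.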

Overcoming Challenges in Design and Development

The development of Nova 2 presented significant challenges across compute power, algorithm design, and hardware integration.

Compute Challenges:

Although Nova 2’s computer was 10x more capable than its predecessor’s, it had to process 30-40x more data. Early prototypes revealed gaps:

- Basic state estimation worked with one RGB camera and one depth camera but often failed in environments lacking rich visual information.

- Initial planning stacks operated at only 1-2 cycles per 10 seconds, which was too slow for real-time missions.

Through months of optimization, including porting CPU work to the Xavier’s GPU and fine-tuning software to run as part of CUDA compute graphs, we achieved massive speedups: the system extracts information from all four RGBD cameras and five depth cameras and estimates full state at 10 Hz, with local planning at 20 Hz and global planning at 2 Hz.

Algorithmic Challenges:

Transitioning from 2D to 3D required fundamental changes:

- Perception: Moving from 2D floor plans to rich 3D voxel grids.

- Cognition: Frontier-based exploration in 2D didn’t scale to 3D, requiring a new approach using biased sampling and ranking potential views by expected information gain.
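The idea of biased sampling followed by ranking views on expected information gain can be sketched in a few lines. This is a toy version under loud assumptions, not the Nova 2 planner: gain is approximated by counting unknown voxels in a cube around the candidate (no occlusion or field-of-view model), the bias simply favors free voxels that touch unknown space, and every name here (`expected_gain`, `sample_views`, `rank_views`, `travel_weight`) is hypothetical.

```python
import math
import random
from typing import Dict, List, Tuple

Voxel = Tuple[int, int, int]
FREE, OCCUPIED = 0, 1  # any voxel missing from the map counts as unknown


def neighbors(v: Voxel) -> List[Voxel]:
    """Six-connected neighbors of a voxel."""
    x, y, z = v
    return [(x + 1, y, z), (x - 1, y, z), (x, y + 1, z),
            (x, y - 1, z), (x, y, z + 1), (x, y, z - 1)]


def expected_gain(view: Voxel, known: Dict[Voxel, int],
                  sensor_range: int) -> int:
    """Count unknown voxels in a cube of half-width sensor_range.

    Simplification: ignores occlusion and camera field of view.
    """
    vx, vy, vz = view
    span = range(-sensor_range, sensor_range + 1)
    return sum((vx + dx, vy + dy, vz + dz) not in known
               for dx in span for dy in span for dz in span)


def sample_views(known: Dict[Voxel, int], count: int,
                 frontier_bias: float, rng: random.Random) -> List[Voxel]:
    """Sample candidate viewpoints from free space.

    With probability frontier_bias a sample is drawn from free voxels
    that border unknown space; otherwise from all free voxels.
    """
    free = sorted(v for v, state in known.items() if state == FREE)
    if not free:
        return []
    frontier = [v for v in free if any(n not in known for n in neighbors(v))]
    views = []
    for _ in range(count):
        use_frontier = bool(frontier) and rng.random() < frontier_bias
        views.append(rng.choice(frontier if use_frontier else free))
    return views


def rank_views(views: List[Voxel], known: Dict[Voxel, int], robot: Voxel,
               sensor_range: int = 2, travel_weight: float = 0.5) -> List[Voxel]:
    """Order unique candidates by gain minus a straight-line travel penalty."""
    def score(view: Voxel) -> float:
        gain = expected_gain(view, known, sensor_range)
        return gain - travel_weight * math.dist(view, robot)
    return sorted(sorted(set(views)), key=score, reverse=True)
```

Sampling sidesteps the need to enumerate every frontier surface in 3D, which is one reason a sample-and-rank scheme can scale where 2D frontier extraction does not. A real system would replace the cube count with ray casting through the voxel grid and the straight-line penalty with a planned path cost.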

Hardware Challenges:

Integrating numerous novel components into a compact form factor created hurdles, including:

- GNSS noise from USB 3.0 devices. Because USB 3.0 devices emit noise in a frequency band similar to the one GNSS uses, interference from the USB 3.0 cameras would prevent the robot from decoding any GNSS signals unless we took great care in designing the electrical system. Rigorous hardware design with proper shielding and continuous de-sense testing ensured that our GNSS receiver had a sufficient signal-to-noise ratio to achieve a reliable fix.

- Magnetometer interference. The magnetometer used for compassing is easily affected by dynamic electric currents onboard. While we separated and shielded components as much as possible, we saw more than 20 degrees of heading error unless we explicitly accounted for current. To solve this, we added a calibration step that switched off individual power rails to gather data, plus a simple regression framework to correct the bias online.

- Disk write slowdowns. One of the most intense bugs we had to solve was a CPU hiccup that paused all critical processes for one second every 80-100 seconds. After extensive profiling and tracing, we eventually found the cause in our chosen disk: the faster single-level cell (SLC) cache would fill up quickly and need 1-2 seconds to flush to the larger triple-level cell (TLC) storage.

- Sensor overheating and intermittent failures. As with many compact, heavy-compute systems, we had to manage our thermals very carefully. Even with an efficient thermal design, we would see CPU throttling in some of our extreme desert conditions. In these cases, we scaled down the resources needed by tracking fewer features and generally simplifying the operations. While not ideal, this allowed the system to handle the heat gracefully rather than fail completely.
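The magnetometer fix described in the list above, a calibration pass followed by a regression that corrects the bias from measured current, could look something like the following. It is a minimal sketch assuming a single current measurement, a per-axis linear relationship between current and field offset, and a stationary robot during calibration; the post does not say which regression was actually used, and all function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of ys ~ intercept + slope * xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("calibration needs at least two distinct currents")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def fit_current_gains(currents: Sequence[float],
                      fields: Sequence[Tuple[float, float]]) -> List[float]:
    """Per-axis field change per amp, fitted from stationary samples.

    The intercept absorbs the ambient (Earth) field, so only the slope
    is kept as the current-induced gain.
    """
    return [fit_line(currents, [f[axis] for f in fields])[1]
            for axis in range(2)]


def compensate(field: Tuple[float, float], current: float,
               gains: Sequence[float]) -> Tuple[float, ...]:
    """Remove the current-induced offset from one horizontal field reading."""
    return tuple(f - g * current for f, g in zip(field, gains))


def field_angle_deg(field_xy: Sequence[float]) -> float:
    """Signed angle of the horizontal field vector, in degrees.

    The sign and axis convention is an assumption for this example.
    """
    return math.degrees(math.atan2(field_xy[1], field_xy[0]))
```

With made-up numbers, a 20 µT field along x plus a 0.8 µT-per-amp disturbance along y at 10 A rotates the apparent field by roughly 22 degrees, and subtracting the fitted term brings it back to zero. Switching rails on and off during calibration matters because it spreads the samples across different current levels, which is what makes the slope identifiable.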

The Right Team and the Right Tech

Nova 2’s success can be attributed to several key factors, starting with a stellar team that tackled hundreds of challenges with skill and determination. Beyond individual brilliance, what really allowed us to ship Nova 2 was extreme grit. While trying to get the multi-camera feature tracker working on early Nova 2 prototypes, I remember being in the lab for almost 30 hours straight, tuning the tracking parameters and adapting the timing between camera and IMU.

Our choice of technology also played a critical role; by betting on the Nvidia compute system and rich 3D camera information, we unlocked significant capabilities that propelled the platform forward. While issues like limited observability in long tunnels and reflections from glass remained, we adapted planning algorithms to overcome these challenges effectively.

Swift updates were made possible through a combination of short feedback loops and robust testing frameworks, allowing us to iterate rapidly. Extensive simulation tests were used to validate and verify algorithm performance, complemented by hardware-in-the-loop testing to ensure the system performed seamlessly on the device itself. Nearly daily flight tests, conducted in the lab and across various real-world conditions, provided invaluable insights and enabled continual refinement.

In the end, Nova 2’s development showcased a combination of the right team, rigorous testing, and strategic technological choices, demonstrating what is possible when innovation and execution align. The development of Nova 2 is instrumental to Shield AI’s aim of deploying Hivemind across a wide range of platforms. By mastering state estimation and navigation in GNSS- and comms-denied environments, we’ve validated these systems in some of the toughest scenarios, including indoor operations. Lessons from Nova quadcopters have directly informed the scaling of Hivemind to larger platforms such as V-BAT, MQM-178 Firejet, and F-16, demonstrating the flexibility and scalability of our autonomy solutions.

About the Author

Vibhav is a Senior Engineering Manager at Shield AI, where he leads the Hivemind Systems Engineering Group, overseeing the Software/Autonomy Architecture, Integration, and Testing of the Hivemind SDK. His role places him at the critical intersection of product development and engineering teams. Previously, he served as the technical lead over various aspects of the AI pilot, including Guidance, Navigation, and Control (GNC). Vibhav holds a master’s degree in robotics and a bachelor’s degree in computer science from Carnegie Mellon University. He lives in San Diego and enjoys cooking, playing guitar, and trying new things like hip-hop dance and volleyball.

This blog is part of a series of case studies highlighting the unique challenges and accomplishments of integrating Hivemind on each platform it has flown. Each installment delves into the technical innovations, collaborative efforts, and mission successes that define our work and our teams.