[October 12, 2020]

The Challenges of Robotic Perception

What are the primary challenges of robotic perception?

The perception challenges we experience as humans are the same kinds of challenges robots face when trying to perceive the world, and there are many examples of this. To begin with, just as people have trouble seeing in the dark, robots may encounter situations in which their sensors do not perform optimally. For a robotic system, this kind of sensory degradation can occur whenever a sensor is used outside of its operating thresholds. Whatever the cause, the result is the same: the robot lacks the information it needs to perceive its surroundings properly.
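One simple, widely used defense against using a sensor outside its thresholds is to gate its readings against a rated envelope before they reach the rest of the perception pipeline (the ROS `sensor_msgs/LaserScan` message, for instance, carries `range_min` and `range_max` fields for this purpose). The Python sketch below is illustrative only: `RangeSensorSpec`, `gate_readings`, and `valid_fraction` are hypothetical names, and the 0.1 m to 30 m envelope is an assumed example rather than a figure for any particular sensor.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class RangeSensorSpec:
    """Hypothetical rated operating envelope for a range sensor, in metres."""
    min_range_m: float
    max_range_m: float


def gate_readings(readings: List[float], spec: RangeSensorSpec) -> List[Optional[float]]:
    """Replace readings outside the sensor's rated range with None.

    None means "no trustworthy information here", not "no obstacle here".
    """
    return [r if spec.min_range_m <= r <= spec.max_range_m else None for r in readings]


def valid_fraction(gated: List[Optional[float]]) -> float:
    """Fraction of readings that survived gating (0.0 for an empty scan)."""
    if not gated:
        return 0.0
    return sum(r is not None for r in gated) / len(gated)


# Assumed example envelope and scan.
spec = RangeSensorSpec(min_range_m=0.1, max_range_m=30.0)
scan = [0.05, 2.4, 15.0, 45.0, 29.9]
gated = gate_readings(scan, spec)
print(gated)                  # [None, 2.4, 15.0, None, 29.9]
print(valid_fraction(gated))  # 0.6
```

Returning `None` instead of dropping entries keeps each reading aligned with its beam index, and a falling valid fraction is a crude signal that the sensor is operating in degraded conditions.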

Repetitive environments also present challenges. When an environment lacks distinctive features, the robot can become confused and struggle to determine reliably where it is. If the environment has a maze-like quality, or looks similar to places the robot has travelled through before, we call this perceptual aliasing. Similarly, a robot may face perceptual ambiguity when its sensors do not provide enough information to discern whether, or how, it is moving relative to another object. From a theoretical perspective, this notion has a precise formulation called observability: the ability of a system to recover its state in a well-posed manner from its sensor observations.
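For linear systems, observability has a standard algebraic test, the Kalman rank condition: the state is observable exactly when the matrix stacking C, CA, ..., CA^(n-1) has rank n. The sketch below assumes a hypothetical one-dimensional robot with state [position, velocity], a constant-velocity motion model, and two assumed sensor configurations. It is a simplification: real robot models are usually nonlinear and call for nonlinear observability analysis, but the linear case shows the idea.

```python
import numpy as np


def observability_matrix(A: np.ndarray, C: np.ndarray) -> np.ndarray:
    """Stack C, CA, CA^2, ..., CA^(n-1) for an n-state linear system."""
    n = A.shape[0]
    blocks = [C]
    for _ in range(n - 1):
        blocks.append(blocks[-1] @ A)
    return np.vstack(blocks)


def is_observable(A: np.ndarray, C: np.ndarray) -> bool:
    """Kalman rank condition: observable iff the observability matrix has rank n."""
    return bool(np.linalg.matrix_rank(observability_matrix(A, C)) == A.shape[0])


dt = 0.1
# Hypothetical state x = [position, velocity] under a constant-velocity model.
A = np.array([[1.0, dt],
              [0.0, 1.0]])

C_position = np.array([[1.0, 0.0]])  # assumed sensor that measures position directly
C_velocity = np.array([[0.0, 1.0]])  # assumed sensor that measures only velocity

print(is_observable(A, C_position))  # True
print(is_observable(A, C_velocity))  # False
```

A sensor that reports only velocity leaves the starting position unrecoverable no matter how long the robot watches, which is a compact way to see why motion sensing alone cannot pin down where a robot is.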

Are there additional challenges to multi-robot perception that do not appear in the context of single robot systems?

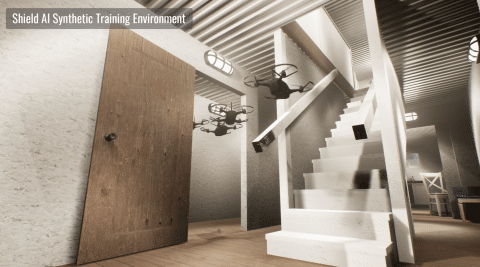

Of course, the challenges that arise in perception for a single robot also arise for multi-robot systems. What becomes interesting when we move to multi-robot systems is coordination and the challenges it introduces. Consider perceptual aliasing in a multi-robot system: if an individual robot has difficulty determining where it is in an environment, it will be even harder for it to communicate that information to other robots.

Can you elaborate on how coordination complicates perception for multi-robot systems?

All kinds of challenges arise when you're talking about multiple robots working together, not just because perception is hard, but because multi-robot systems face a fundamental problem: how to establish consistent relative transformations that bring the different robots' observations of the world into one common frame of reference. In other words, how do you get multiple robots to produce a unified picture?
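In the planar case, a relative transformation between two robots' frames is a rotation plus a translation (an element of SE(2)). The minimal Python sketch below assumes that transformation is already known and uses it to express a landmark seen by robot B in robot A's frame; the function name `transform_point` and the numbers are hypothetical. Estimating the transformation in the first place is the hard part the answer above describes.

```python
import math
from typing import Tuple

Pose2D = Tuple[float, float, float]  # (x, y, theta): planar rigid transform
Point2D = Tuple[float, float]


def transform_point(T_a_b: Pose2D, p_b: Point2D) -> Point2D:
    """Express a point observed in frame B in frame A.

    T_a_b is the pose of frame B expressed in frame A, so
    p_a = R(theta) @ p_b + (x, y).
    """
    x, y, theta = T_a_b
    c, s = math.cos(theta), math.sin(theta)
    px, py = p_b
    return (c * px - s * py + x, s * px + c * py + y)


# Hypothetical example: B's frame sits 5 m along A's x-axis and is rotated
# 90 degrees counter-clockwise relative to A's frame.
T_a_b = (5.0, 0.0, math.pi / 2)
landmark_in_b = (2.0, 0.0)  # B sees a landmark 2 m ahead along its own x-axis
print(transform_point(T_a_b, landmark_in_b))  # approximately (5.0, 2.0)
```

Applying the same transformation to every landmark in B's map places both robots' observations in A's frame, which is one concrete form of the "unified picture".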

For instance, multi-robot perception requires the robots within a system to recognize when they have travelled through the same areas of the environment. As a robot moves and builds a map of its environment, imperfect sensors cause its position estimate to drift over time. We call this dead-reckoning drift. Two robots travelling through the same part of the environment will experience different dead-reckoning drift, making it difficult for them to reason consistently about each other's models of the environment.
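The toy simulation below illustrates dead-reckoning drift under an assumed noise model: zero-mean Gaussian noise on each odometry increment (distance travelled and heading change). The path, noise level, and function names are hypothetical. Two robots driving the identical straight path with different noise draws end up with different, nonzero position errors, so their maps disagree even though they saw the same place.

```python
import math
import random
from typing import Tuple


def dead_reckon(steps: int, step_m: float, turn_rad: float,
                noise_std: float, seed: int) -> Tuple[float, float]:
    """Integrate noisy odometry along a fixed path; return the estimated (x, y).

    Each step the robot intends to move `step_m` forward and then turn
    `turn_rad`; odometry reports both increments corrupted by Gaussian noise.
    """
    rng = random.Random(seed)
    x = y = theta = 0.0
    for _ in range(steps):
        d = step_m + rng.gauss(0.0, noise_std)
        dtheta = turn_rad + rng.gauss(0.0, noise_std)
        x += d * math.cos(theta)
        y += d * math.sin(theta)
        theta += dtheta
    return x, y


def position_error(estimate: Tuple[float, float], truth: Tuple[float, float]) -> float:
    """Euclidean distance between an estimated and a true position."""
    return math.hypot(estimate[0] - truth[0], estimate[1] - truth[1])


# Hypothetical path: 200 steps of 0.5 m in a straight line.
truth = dead_reckon(200, 0.5, 0.0, noise_std=0.0, seed=0)
robot_1 = dead_reckon(200, 0.5, 0.0, noise_std=0.02, seed=1)
robot_2 = dead_reckon(200, 0.5, 0.0, noise_std=0.02, seed=2)
print(truth)
print(position_error(robot_1, truth), position_error(robot_2, truth))
```

Heading noise tends to dominate over long runs, because each heading error rotates every subsequent step, so position error grows faster than the per-step noise alone would suggest.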

Time can further complicate establishing that two robots have seen consistent parts of the world. Perhaps two robots exploring a building meet up after having criss-crossed each other's paths earlier, at different times. In this situation, the robots must communicate and look back through their histories to work out the relative transformations between their paths through the same parts of the environment. This ranks among the bigger challenges in coordinated perception, and it is one we successfully take on every day at Shield AI.
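Once two robots agree that they passed through the same place, possibly at different times, a single relative-pose measurement between their past poses is enough to relate their two map frames: T_A_B = T_A_pa ∘ Z ∘ (T_B_pb)^-1. The planar sketch below assumes that measurement `Z_pa_pb` is already available (for example, from matching the robots' stored observations) and is exact; the function names are hypothetical, and this is a textbook construction rather than a description of Shield AI's method. Because each robot keeps its pose history, the two times need not coincide.

```python
import math
from typing import Tuple

Pose2D = Tuple[float, float, float]  # (x, y, theta): planar rigid transform


def compose(a: Pose2D, b: Pose2D) -> Pose2D:
    """Return a ∘ b, i.e. transform b expressed in a's frame (SE(2) composition)."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    theta = math.atan2(math.sin(at + bt), math.cos(at + bt))  # wrap to (-pi, pi]
    return (ax + c * bx - s * by, ay + s * bx + c * by, theta)


def inverse(a: Pose2D) -> Pose2D:
    """Return a^-1, so that compose(a, inverse(a)) is the identity."""
    ax, ay, at = a
    c, s = math.cos(at), math.sin(at)
    return (-c * ax - s * ay, s * ax - c * ay, -at)


def map_frame_transform(T_A_pa: Pose2D, Z_pa_pb: Pose2D, T_B_pb: Pose2D) -> Pose2D:
    """Estimate the pose of robot B's map frame expressed in robot A's map frame.

    T_A_pa:  robot A's past pose (at its time t_a) in A's map frame
    T_B_pb:  robot B's past pose (at its time t_b) in B's map frame
    Z_pa_pb: assumed measurement of B's pose at t_b relative to A's pose at t_a
    """
    return compose(compose(T_A_pa, Z_pa_pb), inverse(T_B_pb))


# Synthetic check: choose a "true" offset between the maps, build a consistent
# measurement from it, and confirm that the offset is recovered.
true_T_A_B = (3.0, -1.0, 0.4)
T_B_pb = (2.0, 1.0, 0.3)   # where B was, in B's map, when it passed the place
T_A_pa = (4.0, 2.0, -0.2)  # where A was, in A's map, when it passed the place
Z = compose(inverse(T_A_pa), compose(true_T_A_B, T_B_pb))
print(map_frame_transform(T_A_pa, Z, T_B_pb))  # approximately (3.0, -1.0, 0.4)
```

With real, noisy measurements, an estimate like this typically serves as an initial guess that a joint pose-graph optimization over both robots' histories then refines.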