[August 11, 2020]

Considering Communication in Coordinated Perception

Among multi-robot systems, what is the role of communication in perception?

Communication is a consequential consideration within coordinated perception. Fundamentally, when we talk about coordinated perception, we are speaking about multiple robots moving through an environment engaging in perception. Consider the example of a two-robot system with both robots moving through a building. Perhaps earlier in the day, the robots had been in the same location, but not necessarily at the same time. In order to engage in coordinated perception, the robots must share what they observed, that is, communicate with one another. They must do so in a manner that is succinct enough to pass the information given finite bandwidth and resources, while maintaining sufficient fidelity to use that information to develop a consistent, unified model of the world.

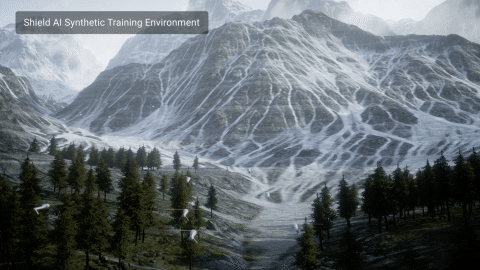

Coordinated perception pushes up against challenges, not just in mapping and state estimation across potentially large numbers of robots, but also in doing so while constrained in terms of communication, connectivity, processing and sensing.

How does communication evolve when you begin to consider the human-in-the-loop and, specifically, how to communicate what the robot has perceived to its human operator?

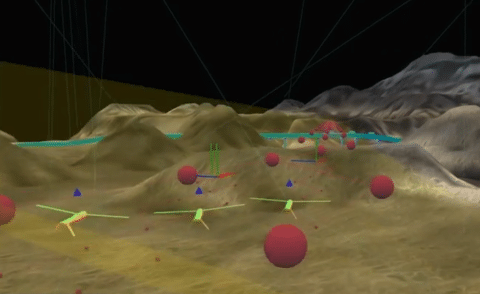

Coordinated perception requires that each robot within a multi-robot system compute robust transforms that bring their models out of the local environment that they’re developing individually into a consistent reference frame, and then distribute that information to the group. In other words, out of many pictures, one must emerge. So, each robot must complete a lot of work locally in order to yield a consistent model that fuses the information from different sources (different sensors on different robots) into a consistent reference frame. The reason that’s possible is trust, which is something we think a great deal about at Shield AI. In order to be able to leverage the data coming from other robots, each individual robot must locally build out its own consistent, trustworthy model.

When you consider systems, like Nova, which are built to operate autonomously, you have a situation in which robots are making their own decisions while human operators are guiding the system from a supervisor perspective. When an operator is working with larger numbers of robots, the operator is seeing the same kind of locally developed joint maps being created right there in front of them through a user interface. That interface will be optimized to focus on those areas that really matter to the operator in order to allow for interaction and engagement. At Shield AI, this is done by the User Experience (UX) design team.

When an operator then interacts with the map on the user interface — let’s say he or she makes a selection of some point that is a location they want the system to travel to — they are interacting with a joint map that has been locally developed to be consistent across robots within the system relative to some consistent coordinate frame. This interaction is then transferred, shared and distributed to the remainder of the systems and the local models they have built. Those models must be appropriately transformed in order to allow for a selection of a point by the operator to be recognized as the same selection of a point by all of the other robots.
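To make the idea of "the same selection of a point" concrete, here is a minimal sketch in Python. It is an illustration only, not a description of how Nova or any Shield AI system works. It assumes a flat 2D world and assumes each robot already knows where its local map origin sits in the shared frame; the names `Pose2D`, `shared_to_local`, `local_to_shared`, and the robot identifiers are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Assumed pose of a robot's local map origin, expressed in the shared frame."""
    x: float
    y: float
    theta: float  # heading of the local x-axis in the shared frame, in radians


def shared_to_local(origin: Pose2D, point: tuple) -> tuple:
    """Express a shared-frame point in the robot's local frame (inverse rigid transform)."""
    dx, dy = point[0] - origin.x, point[1] - origin.y
    c, s = math.cos(origin.theta), math.sin(origin.theta)
    # Undo the translation first, then apply the transposed rotation.
    return (c * dx + s * dy, -s * dx + c * dy)


def local_to_shared(origin: Pose2D, point: tuple) -> tuple:
    """Express a local-frame point in the shared frame (forward rigid transform)."""
    c, s = math.cos(origin.theta), math.sin(origin.theta)
    return (origin.x + c * point[0] - s * point[1],
            origin.y + s * point[0] + c * point[1])


# Hypothetical example: two robots whose local maps started at different places.
robot_frames = {
    "robot_a": Pose2D(0.0, 0.0, 0.0),
    "robot_b": Pose2D(10.0, 5.0, math.pi / 2),
}

# The operator selects one point on the joint map (shared frame).
goal_shared = (12.0, 8.0)

# Each robot interprets that single selection in its own local model.
goals_local = {name: shared_to_local(origin, goal_shared)
               for name, origin in robot_frames.items()}
```

The local coordinates differ from robot to robot, but mapping any of them back through `local_to_shared` recovers the operator's original selection. In practice the hard part is estimating each origin pose robustly, which this sketch simply takes as given.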

This is actually quite challenging to accomplish as not all robots will have access to all information, or even the same information at the same time. Some robots will be sharing information much more rapidly with each other, while other robots will have to transfer information across different links as the network topology changes as a consequence of the robots moving through the environment itself.

Can you elaborate more on that challenge?

It is interesting to consider this sharing of information because in order to make it manageable and tractable, you have to start thinking about which information is relevant and which is not. You need to extract the essence of the information and avoid redundancy so that you are only sharing what you need to share and nothing extra. From there, you also must consider how to manage this in a context in which you cannot guarantee message delivery or time synchronization.
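One way to picture "sharing only what you need" without guaranteed delivery or synchronized clocks is the toy Python sketch below. It is a hypothetical illustration, not the protocol used by any real system. The `MapStore` class, its methods, and the cell labels are all assumptions. Each map cell carries a version counter instead of a timestamp, a robot sends a peer only the cells that peer has not acknowledged, and merging is idempotent so duplicated or repeated messages are harmless.

```python
from dataclasses import dataclass, field


@dataclass
class MapStore:
    """Toy per-robot map: cell -> (version, robot_id, value). Hypothetical design."""
    robot_id: str
    cells: dict = field(default_factory=dict)
    acked: dict = field(default_factory=dict)  # peer -> {cell: (version, robot_id)}

    def observe(self, cell, value):
        """Record a local observation, bumping the cell's version counter."""
        version = self.cells.get(cell, (0, "", None))[0] + 1
        self.cells[cell] = (version, self.robot_id, value)

    def delta_for(self, peer):
        """Return only the cells the peer is not known to hold at their current stamp."""
        known = self.acked.get(peer, {})
        return {c: e for c, e in self.cells.items() if known.get(c) != e[:2]}

    def merge(self, sender, delta):
        """Merge a received delta; safe to apply repeatedly. Returns an acknowledgment."""
        heard = self.acked.setdefault(sender, {})
        for cell, entry in delta.items():
            stamp = entry[:2]
            heard[cell] = stamp  # the sender evidently holds this stamp
            current = self.cells.get(cell)
            # Keep the entry with the higher (version, robot_id) stamp.
            if current is None or stamp > current[:2]:
                self.cells[cell] = entry
        return {cell: entry[:2] for cell, entry in delta.items()}

    def record_ack(self, peer, ack):
        """Note which stamps the peer has confirmed, so they are not resent."""
        self.acked.setdefault(peer, {}).update(ack)


a, b = MapStore("a"), MapStore("b")
a.observe((0, 0), "free")
a.observe((0, 1), "wall")

first = a.delta_for("b")   # both cells are new to b
lost = a.delta_for("b")    # suppose `first` was dropped: the same delta is offered again
ack = b.merge("a", lost)
b.merge("a", lost)         # a duplicate delivery changes nothing
a.record_ack("b", ack)
after_ack = a.delta_for("b")   # nothing left to send

a.observe((0, 1), "door")
update = a.delta_for("b")      # only the changed cell is shared
```

The design choice here is that an unacknowledged message costs nothing beyond being sent again later, and an acknowledged cell is never retransmitted until it changes. Real systems would also need to compress the content itself, which this sketch does not attempt.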

It becomes more interesting as the environment complexity changes because the messaging must change to keep up with the additional complexity. The amount of data that’s being distributed changes, which then changes the rate at which the models and maps can be built across the distributed system, which ultimately changes the rate at which the overall system can deploy and coordinate. What you see is that as the complexity increases, the robots will actually begin to slow down. And as the environment becomes simpler and the robots require less information to accurately model it, you see them start to speed up. You witness the robots naturally adapting to the constraints that the physical system places on the computational system and the electrical communication system.