[January 4, 2023]

Delivering Hivemind: A software ecosystem to enable autonomy on the edge

Alex Burtness is a graduate of the U.S. Naval Academy and Johns Hopkins University, where he studied systems engineering and mechanical engineering, respectively. He served in the Navy as an Explosive Ordnance Disposal (EOD) officer and worked at Brain Corp as a technical program manager before joining the Shield AI team in 2021.

When people ask me why we need AI and autonomy for aircraft, my answer is simple: to tackle problems that humans cannot. At Shield AI, we build edge software that delivers intelligent autonomy and human-machine teaming capabilities for the most challenging missions in data-denied, GPS-denied environments.

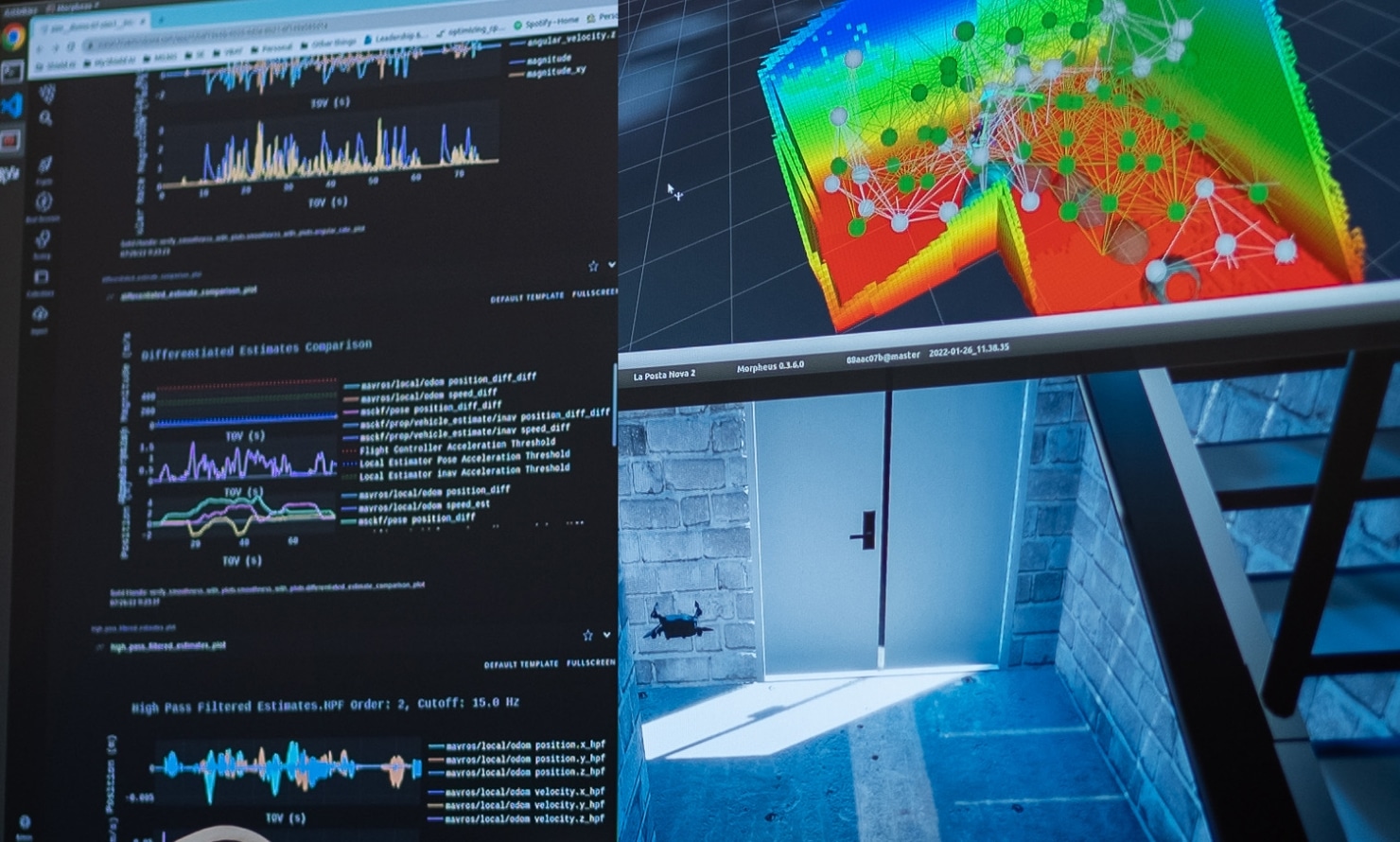

We build autonomous systems to achieve extremely high levels of performance in terms of consistency, reliability, and robustness. To meet these requirements, we have built a software ecosystem called Hivemind that encompasses not only the edge-level autonomy software but also the tools around it that enable rapid development and fielding. Hivemind builds confidence in its autonomy capabilities by using high-fidelity simulation and historical flight data to test algorithmic performance against complex analyzers.

At its core, Hivemind has three components:

- The software modules running on the edge that perform the core functions of perception, cognition, and action.

- The human-machine interface. While our platforms can work independently of human input, they still require humans to provide intent, and they always allow humans to re-task the systems.

- The configuration and testing tools required to launch and analyze the platform at various levels of abstraction.

The complexity of running software at the edge comes from both the time-critical nature of the decisions and the size, weight, power, and cost (SWaP-C) constraints of the platforms. To produce a resilient autonomous system, we combine well-tested, hardened software modules with artificial intelligence in key areas. Starting with the perception stack, we use state-of-the-art optimization frameworks to fuse data from a wide range of sensors and estimate the robot’s state and surroundings in challenging environmental conditions such as GPS denial. The cognition stack uses the world model (the state estimates and map representations produced by the perception stack) together with planning components that reason about the intended objectives and safety to determine the next action. Once that action is decided, the agent adapts what it is doing to achieve the goal. All of this happens hundreds of times per second, without constant human control.
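The perception, cognition, and action loop described above can be sketched in a few lines. This is a minimal, hypothetical illustration, not Hivemind code: the names `perceive`, `decide`, `act`, and `run`, the one-dimensional toy vehicle, and all numeric defaults are assumptions. Inverse-variance weighting (the least-squares estimate for independent Gaussian noise) stands in for the far richer optimization-based estimators a real perception stack would use.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Measurement:
    value: float     # measured 1-D position (m)
    variance: float  # sensor noise variance (m^2)


@dataclass
class WorldModel:
    position: float         # fused state estimate (m)
    variance: float         # uncertainty of the estimate (m^2)
    obstacles: List[float]  # mapped obstacle positions (m)


def perceive(measurements: List[Measurement], obstacles: List[float]) -> WorldModel:
    """Perception (hypothetical): fuse readings by inverse-variance weighting."""
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    position = sum(w * m.value for w, m in zip(weights, measurements)) / total
    return WorldModel(position=position, variance=1.0 / total, obstacles=list(obstacles))


def decide(world: WorldModel, goal: float,
           max_speed: float = 5.0, safety_margin: float = 2.0) -> float:
    """Cognition (hypothetical): velocity command toward the goal, unless an
    obstacle lies ahead within the safety margin."""
    direction = 1.0 if goal >= world.position else -1.0
    for obstacle in world.obstacles:
        ahead = (obstacle - world.position) * direction
        if 0.0 <= ahead <= safety_margin:
            return 0.0  # the safety check overrides the objective
    # Slow down proportionally as the goal gets close.
    return direction * min(max_speed, abs(goal - world.position))


def act(position: float, command: float, dt: float) -> float:
    """Action (hypothetical): apply the command to a toy vehicle for one step."""
    return position + command * dt


def run(true_position: float, goal: float, obstacles: List[float],
        steps: int = 1000, dt: float = 0.01) -> float:
    """Run the perceive -> decide -> act loop at 1/dt Hz (100 Hz by default)."""
    for _ in range(steps):
        # Simulated sensors: two noise-free readings trusted to different degrees.
        readings = [Measurement(true_position, 0.5), Measurement(true_position, 2.0)]
        world = perceive(readings, obstacles)
        command = decide(world, goal)
        true_position = act(true_position, command, dt)
    return true_position
```

With these defaults, `run(0.0, 10.0, [])` settles within about a millimeter of the 10 m goal, while `run(0.0, 10.0, [6.0])` holds near 4 m because the safety check overrides the objective. A real planner would route around the obstacle rather than simply stop.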

Our work with the DoD gives us access to some of the world’s most skilled pilots. We leverage their experience both to develop our aircraft autonomy behaviors and to build interfaces through which humans interact with our AI. Pilots can fly against it, and fly with it as a teammate, observing how it reacts in various operational environments. This is useful in two ways. First, it builds the operator’s trust in the AI agent: they see it perform across a variety of situations and can expect it to react the same way in the real world. Second, we learn from every human-agent interaction and use that information to improve our algorithms.

This work with human pilots also reminds us how important intuitive operator interfaces are during missions. Even the most advanced autonomy platform is not useful if human commanders cannot easily relay their intent and goals. We keep cognitive load low through minimalist design, and we draw on expert knowledge from our close customer relationships to decide which key information to show, and when.

Looking longer term, the powerful part of Hivemind is not just the autonomy itself, but also the way each system integrates with other autonomous systems and the test and validation capabilities it offers. Hivemind lets us take systems from simulation into the real world, where they must deal with all the accompanying complexities. The total solution space we work with involves a massive number of parameters, and it would be impossible for a human engineer to explore it without an ecosystem like Hivemind; we can do in just days what would take a human many years. Hivemind gives engineers the opportunity to work on complex systems, and it supercharges our engineering capabilities. At Shield AI, a single engineer can refine an algorithm, reliably get data about how it would perform on a real-world aircraft, and then see it in flight the next time the aircraft flies. This is rare in our industry, where it has traditionally taken a very long time to see an algorithm fly after it is written.

Designed to rapidly field autonomy capabilities, Hivemind meets the level of robustness the real world requires. Our development cycle looks like this:

- Determine a need for a new capability or a fix to a current one

- Convert the goals into clear acceptance criteria (with analyzers to confirm performance)

- Develop the software solution

- Iterate in simulation with users until the analyzers confirm success

- Iterate in the lab with prototype vehicles (using the same analyzers)

- Test in the real world with users (using the same analyzers)
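The idea of writing analyzers once, as acceptance criteria, and reusing them unchanged at every stage of this cycle can be pictured with a small sketch. It assumes, purely for illustration, that an analyzer is a metric computed from a run log and compared against a threshold; the `Analyzer` class, the metric names, the thresholds, and the log fields are all hypothetical rather than Shield AI’s actual tooling.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical log format: named time series recorded during one run.
RunLog = Dict[str, List[float]]


@dataclass
class Analyzer:
    """One acceptance criterion: a metric computed from a run log plus a threshold."""
    name: str
    metric: Callable[[RunLog], float]
    threshold: float
    higher_is_better: bool = False

    def passes(self, log: RunLog) -> bool:
        value = self.metric(log)
        return value >= self.threshold if self.higher_is_better else value <= self.threshold


# Acceptance criteria written once, before development (hypothetical examples).
ANALYZERS = [
    Analyzer("max_tracking_error_m",
             lambda log: max(abs(e) for e in log["tracking_error"]), 1.5),
    Analyzer("min_clearance_m",
             lambda log: min(log["clearance"]), 5.0, higher_is_better=True),
]

STAGES = ("simulation", "lab", "field")


def evaluate(stage: str, logs: List[RunLog]) -> Dict[str, bool]:
    """Apply the same analyzers to every run from a stage; each must pass on all runs."""
    results = {a.name: all(a.passes(log) for log in logs) for a in ANALYZERS}
    print(f"{stage}: {'PASS' if all(results.values()) else 'FAIL'} {results}")
    return results


def blocking_stage(stage_logs: Dict[str, List[RunLog]]) -> str:
    """Return the first stage that still needs iteration, or 'done' if all pass."""
    for stage in STAGES:
        logs = stage_logs.get(stage, [])
        # A stage with no runs yet has not been validated, so it blocks.
        if not logs or not all(evaluate(stage, logs).values()):
            return stage
    return "done"
```

The design choice this illustrates is that the gate between stages is the same set of checks everywhere, so a pass in simulation and a pass in the field mean the same thing; only the source of the logs changes.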

For me, the two most fundamentally hard and interesting problems are applying engineering to real-world applications and scaling it. Anyone can develop an algorithm and make it work in a one-off demonstration, but taking those same algorithms and seeing them perform reliably in the most complex and adversarial environments is a fundamentally different challenge. Scaling at Shield AI is best represented by the fact that the same algorithm works on hundreds of uncrewed aircraft, deployed to a myriad of environments to accomplish diverse mission sets. We do this by designing carefully at the right abstraction layers and introducing rigorous testing at the earliest stages. The intellectual challenge that accompanies scaling is massive, and cutting corners won’t work: all the tweaks an engineer can make in a lab to “make it work once” are futile at scale. At Shield AI, we are taking on the hardest engineering challenges, and doing so with grit and rigor, because our work directly contributes to a mission with significant global impact.
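What “designing at the right abstraction layers” can buy is easiest to see in a toy example: a behavior written once against a generic `Vehicle` interface runs unchanged on two airframes whose native autopilots speak different units. This is a hypothetical sketch, not Shield AI’s platform interface; the class names, speed limits, control period, and the heading/airspeed autopilot are assumptions chosen for illustration.

```python
import math
from abc import ABC, abstractmethod
from typing import Tuple

DT = 0.1                  # control period in seconds (hypothetical)
KNOTS_PER_MPS = 1.943844  # unit conversion: 1 m/s is about 1.943844 kn


class Vehicle(ABC):
    """Hypothetical abstraction layer: behaviors see only this interface."""

    @abstractmethod
    def position(self) -> Tuple[float, float]:
        """Current (x, y) position in meters."""

    @abstractmethod
    def command_velocity(self, vx: float, vy: float) -> None:
        """Request a velocity in m/s for one control period."""


class Quadcopter(Vehicle):
    """Toy multirotor that accepts velocity commands directly."""
    MAX_SPEED = 10.0  # m/s, hypothetical limit

    def __init__(self, x: float = 0.0, y: float = 0.0):
        self.x, self.y = x, y

    def position(self) -> Tuple[float, float]:
        return (self.x, self.y)

    def command_velocity(self, vx: float, vy: float) -> None:
        speed = math.hypot(vx, vy)
        scale = min(1.0, self.MAX_SPEED / speed) if speed > 0 else 0.0
        self.x += vx * scale * DT
        self.y += vy * scale * DT


class FixedWing(Vehicle):
    """Toy fixed-wing whose native autopilot takes heading (deg) and airspeed (kn)."""
    MAX_AIRSPEED_KN = 80.0  # hypothetical limit

    def __init__(self, x: float = 0.0, y: float = 0.0):
        self.x, self.y = x, y

    def position(self) -> Tuple[float, float]:
        return (self.x, self.y)

    def _autopilot(self, heading_deg: float, airspeed_kn: float) -> None:
        # Native interface; heading is measured from the +x axis for simplicity.
        speed = min(airspeed_kn, self.MAX_AIRSPEED_KN) / KNOTS_PER_MPS
        self.x += speed * math.cos(math.radians(heading_deg)) * DT
        self.y += speed * math.sin(math.radians(heading_deg)) * DT

    def command_velocity(self, vx: float, vy: float) -> None:
        # Adapter: translate the common velocity command into native units.
        self._autopilot(math.degrees(math.atan2(vy, vx)),
                        math.hypot(vx, vy) * KNOTS_PER_MPS)


def fly_to(vehicle: Vehicle, goal: Tuple[float, float], speed: float = 5.0,
           tolerance: float = 0.5, max_steps: int = 10_000) -> bool:
    """Platform-agnostic go-to-waypoint behavior; True if the goal is reached."""
    for _ in range(max_steps):
        x, y = vehicle.position()
        dx, dy = goal[0] - x, goal[1] - y
        dist = math.hypot(dx, dy)
        if dist <= tolerance:
            return True
        v = min(speed, dist)  # slow down proportionally near the goal
        vehicle.command_velocity(dx / dist * v, dy / dist * v)
    return False
```

In this sketch, `fly_to(Quadcopter(), (30.0, 40.0))` and `fly_to(FixedWing(), (30.0, 40.0))` both reach the waypoint; the unit conversion lives in the adapter, so the behavior itself never needs to know which airframe it is flying.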