[August 7, 2023]

Generative AI Builds Autonomy for Defense

How Generative AI Will Enable Warfighters, Not Engineers, to Create Autonomy and Asset Mission Packages Imminently

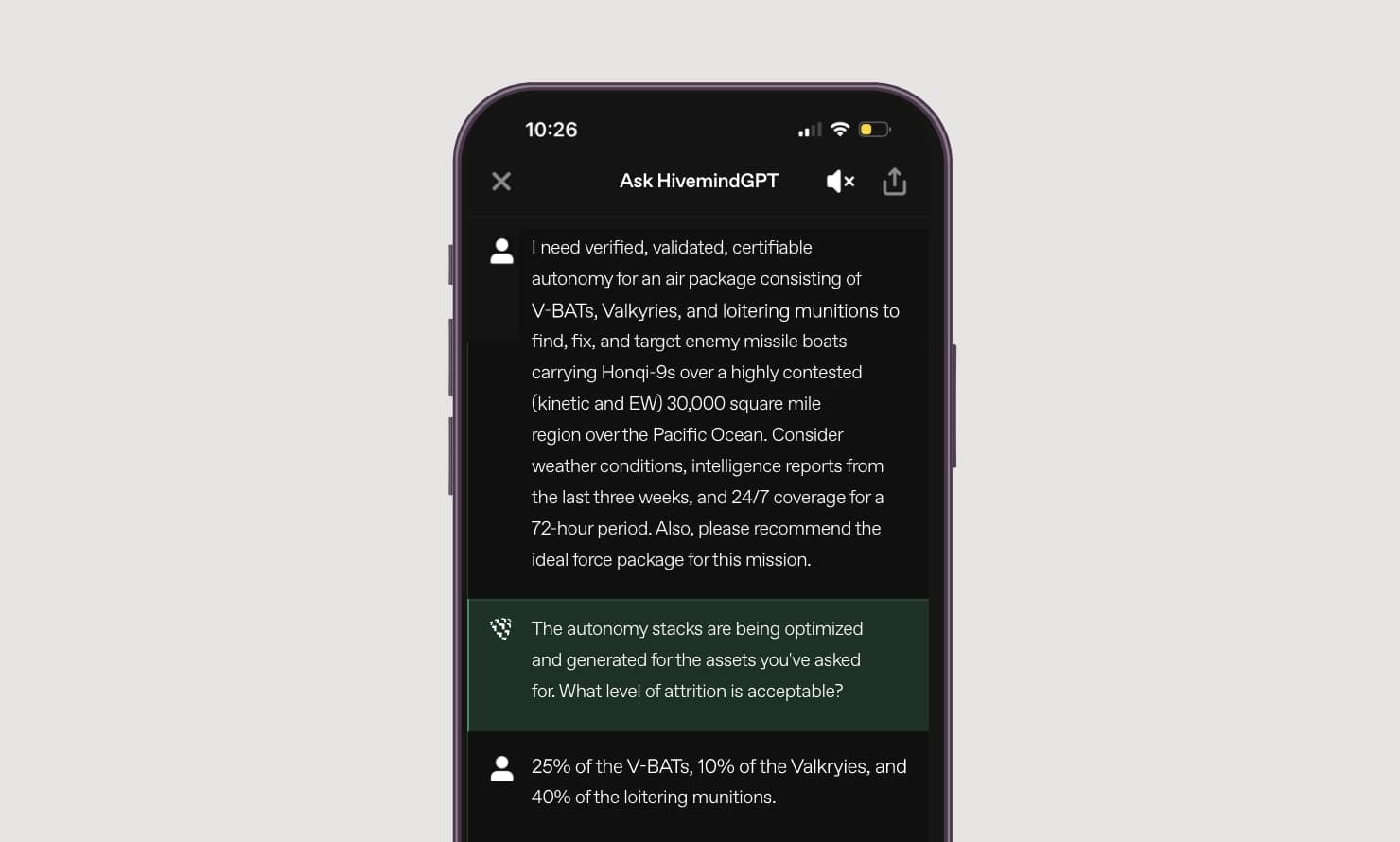

Scene Setter – Somewhere in the Pacific, circa 2028

The above scenario represents an achievable aimpoint for Shield AI that we have been working towards since the first papers on large language models were published. After reading those papers in 2017 and 2018, we decided that generative AI applied to a sophisticated, well-defined autonomy architecture would play an important role in the development of autonomy itself. We decided we would integrate generative AI techniques into our autonomy product roadmap, just as we had integrated reinforcement learning techniques into our product roadmap. The initial results are promising; the end products will change everything.

Paradigm Shifts in Autonomy as a Result of Generative AI

The robot autonomy domain is benefiting significantly from generative AI techniques. These enhancements apply to how operators can plan for an autonomous robotic mission or undertaking, as well as how they command squadrons or fleets of intelligent robots. Shield AI’s vision is a paradigm shift away from an ‘AI-engineer-in-the-loop’ model, where the engineer is integral to the design, deployment, evaluation, and iteration of autonomy software for a particular use case. The shift is towards an ‘operator-on-the-loop’ model, where the operator’s intent alone is sufficient for generating, testing, evaluating, and deploying working software for autonomy.

To achieve this vision, Shield AI has been developing novel technologies for interacting with our autonomous robots that take natural language as input. These innovations have the potential to reduce complexity and friction across a range of user interactions in mission planning and command. Natural language lets an operator describe their intent (what the operator wishes to achieve) within a particular concept of operations (CONOPS): the environment and context of the mission, together with a tactical description of the mission objective and how it is achieved. Here, the role of generative models is to transform unstructured input from an operator into a structured form that can be processed and realized as a self-parametrized autonomy stack, coupled with a computable scenario description in which this stack is deployed, tested, and evaluated.
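As a rough illustration of what "structured form" could mean in practice, the sketch below validates a generative model's output against a small mission schema before anything downstream consumes it. The `MissionSpec` fields, the JSON layout, and the `parse_mission_spec` helper are all hypothetical and chosen only for illustration; they are not Shield AI's actual format.

```python
import json
from dataclasses import dataclass


@dataclass
class MissionSpec:
    """Hypothetical structured form of an operator's intent."""
    objective: str
    area: str
    num_assets: int
    constraints: list[str]


# Assumed schema: field name -> expected JSON type.
REQUIRED = {"objective": str, "area": str, "num_assets": int, "constraints": list}


def parse_mission_spec(model_output: str) -> MissionSpec:
    """Parse and validate JSON text produced by a generative model."""
    data = json.loads(model_output)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key, expected in REQUIRED.items():
        if key not in data:
            raise ValueError(f"missing field: {key}")
        if not isinstance(data[key], expected):
            raise ValueError(f"field {key} must be of type {expected.__name__}")
    if data["num_assets"] < 1:
        raise ValueError("num_assets must be at least 1")
    return MissionSpec(**{key: data[key] for key in REQUIRED})


# Stand-in for text a model might return for a typed operator request.
EXAMPLE_OUTPUT = (
    '{"objective": "search", "area": "sector-7", '
    '"num_assets": 4, "constraints": ["avoid shipping lanes"]}'
)
spec = parse_mission_spec(EXAMPLE_OUTPUT)
```

Rejecting malformed output at this boundary means the rest of the pipeline only ever sees a well-typed mission description, regardless of how the operator phrased the request.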

The ultimate value of these methods within robotic autonomy depends on the qualities that matter most in this domain: reliability and trustworthiness.

Building Trustworthy AI: Leveraging Generative AI for Robotic Autonomy

Shield AI develops technological products in which we—as operators or society at large—must place our trust, as our lives may depend on them. As such, it is critical that these technologies be verified and certified with the utmost diligence and rigor. In order to validate such systems, we must have the deepest and most rigorous understanding of their inner workings, their ways of managing edge-cases, and how they deal with failures. Shield AI’s vision for generative AI within robotic autonomy is therefore built on this foundational question: how can AI writing AI software lead to products that are more trustworthy, reliable, and explainable to their users?

In order to achieve this objective, Shield AI is developing its generative AI atop a models-based autonomy architecture that leverages modularity, testability, verifiability, and explainability at its core. This architecture is built on the concept of blocks, the foundational components of autonomy capabilities. These blocks are enriched with extensive metadata describing their objectives, inputs, outputs, and parametrization, and this metadata is provided to generative models as context. We then leverage generative AI to select and connect blocks to achieve desired outcomes.
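To make the idea of metadata-rich blocks concrete, here is a minimal sketch under stated assumptions. The `Block` class, its fields, the example blocks, and the `validate_connections` check are hypothetical illustrations of the concept described above, not Shield AI's architecture. The point is that a wiring proposed by a generative model can be checked mechanically against the declared inputs and outputs before it is accepted.

```python
from dataclasses import dataclass, field


@dataclass
class Block:
    """Hypothetical autonomy block described entirely by its metadata."""
    name: str
    objective: str
    inputs: dict[str, str]    # port name -> data type
    outputs: dict[str, str]   # port name -> data type
    params: dict[str, float] = field(default_factory=dict)


def describe(block: Block) -> str:
    """Render block metadata as text that could be given to a model as context."""
    return (
        f"{block.name}: {block.objective} | "
        f"inputs={block.inputs} outputs={block.outputs} params={block.params}"
    )


def validate_connections(blocks, connections):
    """Check (src, out_port, dst, in_port) tuples against block metadata.

    Returns a list of human-readable problems; an empty list means the
    proposed wiring is consistent with the declared ports and types.
    """
    by_name = {b.name: b for b in blocks}
    problems = []
    for src, out_port, dst, in_port in connections:
        if src not in by_name or dst not in by_name:
            problems.append(f"unknown block in {src} -> {dst}")
            continue
        out_type = by_name[src].outputs.get(out_port)
        in_type = by_name[dst].inputs.get(in_port)
        if out_type is None:
            problems.append(f"{src} has no output '{out_port}'")
        elif in_type is None:
            problems.append(f"{dst} has no input '{in_port}'")
        elif out_type != in_type:
            problems.append(
                f"type mismatch: {src}.{out_port} ({out_type}) -> "
                f"{dst}.{in_port} ({in_type})"
            )
    return problems


# Illustrative block library (names and types are made up).
detect = Block("detect_targets", "Find targets in imagery",
               inputs={"imagery": "Image"}, outputs={"tracks": "TrackList"})
plan = Block("plan_route", "Plan a route to tracked targets",
             inputs={"tracks": "TrackList"}, outputs={"route": "Route"},
             params={"max_speed_mps": 30.0})
LIBRARY = [detect, plan]

# Stand-ins for wirings a generative model might propose.
proposed = [("detect_targets", "tracks", "plan_route", "tracks")]
bad = [("detect_targets", "tracks", "plan_route", "route")]
```

Calling `validate_connections(LIBRARY, proposed)` returns an empty list, while the `bad` wiring is reported because `plan_route` declares no input named `route`. Keeping this check outside the model is one way a modular architecture can stay testable even when the composition step is generated.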

In our approach, generative AI goes further, combining reduced friction in user interaction with the assurances provided by formal verification methods applied to autonomy architectures: safety (nothing bad happens), liveness (something good eventually happens), invariance (things that should happen always happen, and those that should not, do not), and deadlock freedom (the system always makes progress).
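Two of these properties can be illustrated on a toy example. The sketch below explores every reachable state of a small, hypothetical mission state machine and checks safety (no unsafe state is reachable) and deadlock freedom (every reachable non-terminal state has a successor). The state names and the `check` helper are assumptions made for illustration; real formal verification relies on dedicated model checkers and much richer models, and liveness in particular needs more machinery than is shown here.

```python
from collections import deque


def reachable(initial, transitions):
    """Breadth-first search over the states reachable from `initial`."""
    seen = {initial}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        for nxt in transitions.get(state, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def check(initial, transitions, unsafe, terminal):
    """Check safety and deadlock freedom by exhaustive state exploration."""
    states = reachable(initial, transitions)
    return {
        # Safety: no reachable state is in the unsafe set.
        "safety": not (states & unsafe),
        # Deadlock freedom: every reachable state can move on or is terminal.
        "deadlock_free": all(
            bool(transitions.get(s)) or s in terminal for s in states
        ),
    }


# Hypothetical mission state machine.
MISSION = {
    "idle": ["transit"],
    "transit": ["search"],
    "search": ["track", "return"],
    "track": ["return"],
    "return": ["landed"],
    "landed": [],
}

ok = check("idle", MISSION, unsafe={"geofence_breach"}, terminal={"landed"})

# A faulty variant in which tracking never hands control back.
FAULTY = dict(MISSION, track=[])
stuck = check("idle", FAULTY, unsafe={"geofence_breach"}, terminal={"landed"})
```

Here `ok` reports both properties as holding, while `stuck` flags a deadlock because the `track` state has no way forward. Exhaustive exploration of this kind only scales to small models, which is one reason a modular, well-specified architecture matters for verification.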

Human-Driven Strategies, Tactical AI Execution

To apply this to a specific example, consider the CONOPS described at the beginning. Instead of performing complex mission planning for each individual robot while also reasoning and strategizing about coordination among these robots, the operator is provided with a ready-to-fly system tailored to this mission, one that has been tested and validated across thousands of simulated missions.

Building on this, our generative AI approaches go even further to discover and describe the adversarial environments in which our autonomy can be tested to expose its weaknesses, which in turn feeds back into our training methodologies to address those weaknesses. The result is an autonomy stack that is rapidly developed, verified, quality-assured, and deployed onto diverse target platforms for a variety of CONOPS with explainability at the level of every line of code, coupled with user interactions with autonomous systems that fluidly reduce friction in achieving mission objectives.

Optimal Human and Machine Teams

Much like an orchestra in which every instrument contributes to a harmonious whole, generative AI is orchestrating a revolution in human experiences and interactions across many domains, defense and national security included, enhancing the quality of these experiences and driving innovation at an unprecedented scale. At Shield AI, we are applying this technology to the development of autonomous products that deter conflict, where a simple typed description from a warfighter can generate the autonomy stacks for hundreds, if not thousands, of aircraft, surface vessels, and underwater vessels to execute the most strategic and complex combined-arms maneuvers and missions. We believe achieving this will yield the greatest victory: that which requires no war.

Ali Momeni is a VP of Engineering at Shield AI, where he is at the forefront of an autonomous revolution. He is building a future where current remotely piloted drones are replaced with heterogeneous teams of autonomous aircraft tasked by simple human commands, delivering unprecedented air superiority and deterrence for US forces in future conflicts. Prior to Shield AI, he was a faculty member at Carnegie Mellon University, where he spearheaded research and education at the intersection of Design and Technology.
