[January 29, 2019]

On the Use of Simulation in Autonomy

A conversation with Ali Momeni, Senior Principal Scientist and Director of User Services & Experience at Shield AI. This is a continuation of our conversation On Human Computer Interaction.

What is simulation being used for at Shield AI?

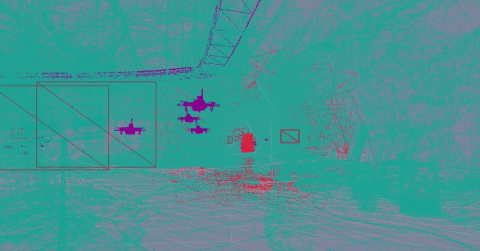

Shield AI, like many in autonomous robotics, is deeply invested in simulation and emulation. Think of simulation as something that’s totally virtualized and abstracted, and emulation as something that is real but placed outside its native context. If you take a real thing and put it into a virtual environment (e.g. the Star Trek Holodeck), that’s a form of emulation. If, on the other hand, you model and mimic the qualities and behaviors of a real thing, and you place that copy into the virtual world, that’s a form of simulation (e.g. Epic’s Fortnite). Visualization of data plays an important role in both emulation and simulation: it enables the user to understand how the virtual world evolves, and it facilitates realistic modeling of specific system capabilities (such as cameras).

What real world impact does simulated testing have?

There are many positive implications. A tangible one concerns the actual physical form of the robot. We were able to figure out through simulation that if we adjusted the placement of one of Nova’s cameras, the propellers would no longer be in the way of the camera image. Obviously, it was advantageous to make this observation before we manufactured the physical robot.

What are you most excited about with regard to simulation at Shield AI?

Building trust. It will be a really exciting moment when I can see on the faces of our test pilots that they really trust the simulation, and that seeing something in simulation gives them confidence it will occur similarly in the real world. The test pilots have a real feel for the whole experience. So when they trust the simulation, we’ve done something right.