[January 19, 2024]

Revolutionizing V-BAT Operations: Navigating Autonomy in Single and Team Missions

With Shobhit Srivastava

In an age where technology is redefining our skies, how do we ensure that our autonomous V-BATs not only fly solo but also work seamlessly in teams? In this conversation, Shobhit discusses the intricacies behind the technology that empower these intelligent aircraft to operate autonomously, whether alone or in collaboration.

https://shieldai.canto.com/direct/video/vlgcdogpul71b0f63fo4kujr0v/ZZH1Z6B1pFAGbV_l0oq8hYUvMTs/original?content-type=video%2Fx-m4v&name=Detterence+Requirements.mp4

Q: Let’s start with the basics! Talk to us about autonomy for aircraft.

A: First off, I will say that military aircraft and their operations are prime candidates for autonomy. Over the past century, the U.S. military has analyzed how decisions are made in order to create a systematic way to improve decision velocity, leading to the development of the OODA loop: observe, orient, decide, and act. Pilots must cycle through this loop quickly, continuously, and efficiently to maneuver aircraft, a task that becomes exponentially more difficult with the addition of each new aircraft.

The goal of autonomy with respect to flight operations is not to enable each aircraft to cycle through the OODA loop without guidance; rather, it is to optimize execution and return the strategic and tactical components of the decision-making process to the pilot. This results in faster reaction times and ensures the safety of both humans and machines involved in the process.

This becomes an irreplaceable function in communications-denied environments where pilots and satellites cannot communicate with V-BAT agents. One example of an environment where communications jamming is prevalent is the ongoing Russia-Ukraine conflict, where drones have already proven crucial in turning the tide without increasing human toll.

Q: What are the components of the autonomy stack in a V-BAT, and how do they work together to enable operation without human intervention?

A: The autonomy stack consists of the sensing, state estimation, mapping, planning, and controls systems. Functionally, each of these has subcomponents that work together to achieve synergistic results, which are fed into the other systems either sequentially or simultaneously.

Sensors such as cameras and inertial measurement units on the V-BAT pass their readings to the state estimation stack (which depicts the agent’s position, orientation, velocity, and other important state details as accurately as possible) and to the mapping system. The mapping system incorporates observations into a global depiction of the world, including the V-BAT’s surroundings, other aircraft, no-fly zones, and the locations and velocities of vessels at sea if applicable. The role of the planning system is to use the estimated state of the V-BAT, the world model, and the desired goals of the mission to determine the next set of actions.

To accomplish this, the planning stack, which is composed of the executive (task-level) and behavior (objective-level) managers, converts the intent of the user into the tasks and behaviors needed to accomplish that intent. The controls block provides the specific thrust commands for the engine and the deflection amounts for the control vanes and ailerons needed to carry out the planned tasks.
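
As a rough illustration of how these blocks hand off to one another, the Python sketch below runs one cycle of a toy pipeline: state estimation, mapping, planning, then controls. Every name and number in it (`State`, `WorldModel`, the no-fly-zone format, the speed and gain values) is a hypothetical chosen for the example, and the simple integration and proportional control stand in for far more capable components on a real aircraft.

```python
import math
from dataclasses import dataclass, field


@dataclass
class State:
    x: float
    y: float
    vx: float
    vy: float


@dataclass
class WorldModel:
    # Hypothetical map content: circular no-fly zones as (cx, cy, radius).
    no_fly_zones: list = field(default_factory=list)


def estimate_state(prev, accel, dt):
    """State estimation (toy): integrate one inertial reading."""
    vx = prev.vx + accel[0] * dt
    vy = prev.vy + accel[1] * dt
    return State(prev.x + vx * dt, prev.y + vy * dt, vx, vy)


def update_map(world, new_zones):
    """Mapping (toy): fold newly observed zones into the world model."""
    world.no_fly_zones.extend(new_zones)
    return world


def plan(state, world, goal, speed=2.0):
    """Planning (toy): fly toward the goal unless it lies in a no-fly zone."""
    for cx, cy, r in world.no_fly_zones:
        if math.hypot(goal[0] - cx, goal[1] - cy) <= r:
            return (0.0, 0.0)  # hold position instead of entering the zone
    dx, dy = goal[0] - state.x, goal[1] - state.y
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return (0.0, 0.0)
    return (speed * dx / dist, speed * dy / dist)


def control(state, desired_velocity, gain=0.5):
    """Controls (toy): proportional command on the velocity error."""
    return (gain * (desired_velocity[0] - state.vx),
            gain * (desired_velocity[1] - state.vy))


def tick(prev, world, accel, new_zones, goal, dt=1.0):
    """One pass through the stack: estimate, map, plan, control."""
    state = estimate_state(prev, accel, dt)
    world = update_map(world, new_zones)
    command = control(state, plan(state, world, goal))
    return state, world, command
```

Calling `tick` repeatedly with fresh sensor readings gives the sequential flow described above; in practice the blocks would run at different rates and in parallel.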

Q: How do you overcome the challenges associated with operating V-BATs out in the field without comms/GPS?

A: There’s no question that state estimation without GPS or GNSS is a challenge, but we learned how to make our Nova quadcopters work in the most challenging cases – indoors, without comms and GPS – and we are bringing all of that experience to V-BAT.

Using a combination of visual and inertial sensors, we deploy Nova 2 into nearly any environment irrespective of GPS, and we regularly work indoors where there isn’t GPS. We extend this sliding-window, optimization-based estimation system to the V-BAT by incorporating additional sensor modalities.

There are two aspects of solving the localization problem without GPS. The simpler aspect is understanding your motion in a relative frame. This is possible in cases where V-BAT cannot observe a global reference frame but is able to track objects that reveal its relative motion. In cases where we started with a known location in a global frame, we can use the relative motion to extrapolate our position accurately. To solve the relative estimation problem, we use visual features (e.g., slow-moving boats) or signals of opportunity (e.g., stray RF emitters) to triangulate new landmarks and track them over time.
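
The extrapolation step can be sketched in a few lines of Python. This is a minimal 2D dead-reckoning example, assuming the relative estimator outputs body-frame displacement and heading-change increments; the function name `propagate` and the increment format are hypothetical, and a real system would also track uncertainty as it accumulates.

```python
import math


def propagate(pose, increments):
    """Extrapolate a global pose from relative motion.

    pose: (x, y, heading_rad) at the last known global fix.
    increments: iterable of (dx, dy, dheading) in the vehicle's body frame.
    """
    x, y, theta = pose
    for dx, dy, dtheta in increments:
        # Rotate the body-frame displacement into the global frame.
        x += dx * math.cos(theta) - dy * math.sin(theta)
        y += dx * math.sin(theta) + dy * math.cos(theta)
        theta += dtheta
    return x, y, theta


# Last global fix at (100, 200) heading along +x; move 10 forward,
# turn 90 degrees left, then move 5 forward.
pose = propagate((100.0, 200.0, 0.0), [(10.0, 0.0, math.pi / 2), (5.0, 0.0, 0.0)])
```

Because each increment carries some error, the extrapolated position drifts over time, which is why the global-frame problem described next still has to be solved.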

The harder aspect is then figuring out position in a global frame. While GPS provides this using externally received signals, V-BAT must do so using only what is on board when it is in a denied environment. The general framework uses internally saved maps of various formats, together with sensors that provide estimates of potential positions in those maps. For example, we can save a detailed magnetic map of the world and use a carefully calibrated magnetometer to look for subtle anomalies that indicate unique positions on the map. Similarly, we currently employ a vision-based system that looks for key landmarks and correlates them with satellite imagery to determine location over land without GPS.

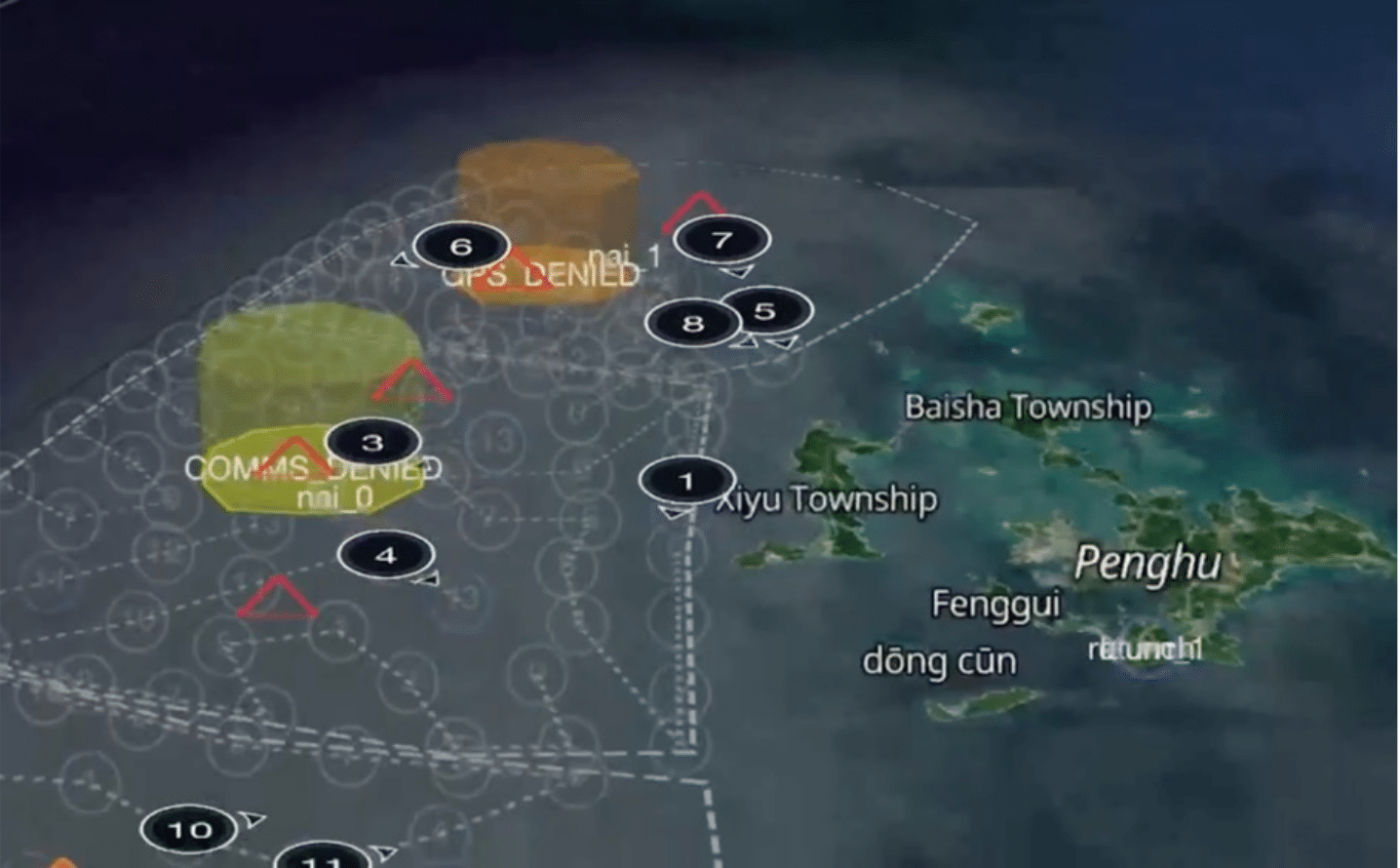
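
To make the map-matching idea concrete, here is a deliberately simplified Python sketch: a short sequence of magnetometer readings is slid along a stored one-dimensional anomaly profile, and the offset with the smallest squared error is taken as the position estimate. The function name `best_match`, the 1D track, and the sample values are assumptions made for illustration; an operational system would work over 2D or 3D maps and fuse the result with the relative-motion estimate.

```python
def best_match(stored_profile, measured):
    """Return (index, error) of the best alignment of `measured`
    within `stored_profile`, using sum of squared differences."""
    best_index, best_error = None, float("inf")
    last_start = len(stored_profile) - len(measured)
    for start in range(last_start + 1):
        error = sum(
            (stored_profile[start + offset] - value) ** 2
            for offset, value in enumerate(measured)
        )
        if error < best_error:
            best_index, best_error = start, error
    return best_index, best_error


# Hypothetical field strengths sampled at evenly spaced points along a track.
stored_profile = [50.0, 50.2, 49.8, 51.5, 52.3, 50.1, 49.9, 50.0]
measured = [51.4, 52.4, 50.0]  # noisy readings from the on-board sensor
index, error = best_match(stored_profile, measured)
```

The match is only as distinctive as the anomaly itself: over a flat stretch of the profile many offsets score almost equally, which is one reason several sensing modalities are combined.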

In addition to the GPS-denied problem, the lack of communications limits user interaction with V-BAT as well as inter-agent interactions. To allow a V-BAT to operate effectively without communications, on-board autonomy tracks the intended mission of the user and continues without direct human intervention even when unexpected situations arise. The autonomy framework allows the V-BAT to return safely to comms range when needed. In teaming scenarios, V-BATs communicate opportunistically to share their world models, which allows each one to plan the overall mission with respect to other agents’ plans, even without constant comms.
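
One simple way to picture opportunistic sharing is a merge that keeps the freshest observation of each tracked object whenever two agents come into contact. The Python sketch below assumes a hypothetical world model that maps a track ID to a `(timestamp, position)` pair; the actual representation and merge policy used on V-BAT are not described here.

```python
def merge_world_models(mine, theirs):
    """Merge two world models, keeping the newest observation per track.

    Each model maps track_id -> (timestamp_seconds, (x, y)).
    """
    merged = dict(mine)
    for track_id, observation in theirs.items():
        if track_id not in merged or observation[0] > merged[track_id][0]:
            merged[track_id] = observation
    return merged


own_model = {"vessel-1": (100.0, (3.0, 4.0)), "vessel-2": (90.0, (8.0, 1.0))}
peer_model = {"vessel-2": (120.0, (9.0, 1.5)), "vessel-3": (110.0, (0.0, 7.0))}
shared = merge_world_models(own_model, peer_model)
```

Because the merge depends only on timestamps, it gives the same result no matter which agent initiates the exchange or how long the pair was out of contact.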

Q: Now that we know how a single V-BAT agent works, how do this functionality and its corresponding challenges extend to V-BAT teams?

Note to the reader: Our previous blog post, “The Challenges of Robotic Perception,” provides further background information on the challenges that robots face with group communication.

A: Nothing about the autonomy stack intrinsically changes between single-agent and team operations, but the performance requirements and complexity increase, since the team must function as a unit that is more efficient than a single agent.

To accomplish this, the elements of the autonomy stack are augmented to improve reliability and scalability. For example, in a team, the state estimation stack keeps tabs on the states of other V-BAT agents along with its own, establishing a common reference frame for collaborative decision-making. The global map is also augmented with shared information from multiple agents, painting a more complete picture of the environment. The planning stack allows multiple agents to leverage the joint environment representation to dynamically generate a set of tasks, behaviors, and actions aligned under the same strategy to ensure safety as well as efficient mission execution.

This augmentation raises the level of abstraction at which operators work. Accuracy improves because human operators can focus more deeply on the fewer tasks under their purview, which will become more tactical and strategic in nature as autonomy becomes more capable.

A “multi-agent executive manager,” or MAEM, can be made responsible for allocation of tasks across multiple agents. For example, if there are n tasks and m agents, MAEM would decide what subset of tasks to assign to which agent. Generally, MAEM gets details of the mission (strategy) directly from the operator and breaks this down into tasks for individual agents. Even in a team though, all V-BATs follow the same strategy; the MAEM allows them to execute different behaviors in pursuit of fulfilling that overarching strategy. It’s like a syndication of the planning stack in a single V-BAT agent.
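
The n-tasks-to-m-agents decision can be illustrated with a small greedy heuristic in Python. This is not a description of how MAEM actually allocates work; it is a stand-in that assigns each task to the agent whose accumulated travel distance would be lowest, and the names `allocate`, `vbat1`, and `vbat2` are hypothetical.

```python
import math


def allocate(tasks, agents):
    """Greedily assign tasks to agents.

    tasks: task_name -> (x, y) location.
    agents: agent_name -> (x, y) starting location.
    Returns agent_name -> ordered list of task names.
    """
    assignment = {name: [] for name in agents}
    position = dict(agents)            # where each agent will be next
    load = {name: 0.0 for name in agents}  # distance already committed
    for task, location in sorted(tasks.items()):
        # Pick the agent that would finish this task with the least total travel.
        best = min(agents, key=lambda a: load[a] + math.dist(position[a], location))
        load[best] += math.dist(position[best], location)
        position[best] = location
        assignment[best].append(task)
    return assignment


agents = {"vbat1": (0.0, 0.0), "vbat2": (10.0, 0.0)}
tasks = {"a": (1.0, 0.0), "b": (9.0, 0.0), "c": (2.0, 0.0)}
plan = allocate(tasks, agents)
```

A greedy pass like this is fast but not optimal; it is shown only to make the shape of the problem concrete, with one shared mission broken into per-agent task lists.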

In a GPS-denied environment, the V-BATs would rely on one V-BAT to establish a reference frame that the rest would use for state estimation. At first, one V-BAT’s estimates don’t make sense to the other V-BATs, because each uses a different reference frame. A transformation is applied to reconcile the frames, after which the estimates make sense at the team level.
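
A minimal 2D version of that reconciliation is sketched below in Python, assuming two agents have each observed the same two landmarks in their own frames. From those correspondences the rotation and translation between frames can be recovered and then applied to any other estimate. The function names are hypothetical, and a real system would use many noisy correspondences in 3D and solve a least-squares alignment instead.

```python
import math


def estimate_transform(p_a, q_a, p_b, q_b):
    """Recover (theta, tx, ty) mapping frame B into frame A from two
    landmarks p and q seen in both frames."""
    angle_a = math.atan2(q_a[1] - p_a[1], q_a[0] - p_a[0])
    angle_b = math.atan2(q_b[1] - p_b[1], q_b[0] - p_b[0])
    theta = angle_a - angle_b
    c, s = math.cos(theta), math.sin(theta)
    # Translation is whatever remains after rotating landmark p.
    tx = p_a[0] - (c * p_b[0] - s * p_b[1])
    ty = p_a[1] - (s * p_b[0] + c * p_b[1])
    return theta, tx, ty


def to_frame_a(point_b, transform):
    """Express a point given in frame B in frame A."""
    theta, tx, ty = transform
    c, s = math.cos(theta), math.sin(theta)
    x, y = point_b
    return (c * x - s * y + tx, s * x + c * y + ty)


# Landmarks p and q as seen by agent A and by agent B.
transform = estimate_transform((5.0, 3.0), (5.0, 4.0), (0.0, 0.0), (1.0, 0.0))
converted = to_frame_a((2.0, 1.0), transform)
```

Once every agent converts its estimates with a transform like this, positions reported by different V-BATs can be compared and fused directly.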

Q: What is Shield AI doing to address the challenges of teams operating in comms-denied environments?

A: At Shield AI, we are tackling the challenges of comms-denied environments by engineering our autonomy software to function effectively even when communication is inconsistent. We prepare our V-BAT Teams with a strong foundational understanding of their tasks and roles, enabling them to operate cohesively and adaptively despite communication disruptions. Our V-BAT agents are designed to maintain communication with at least one other agent, ensuring continuous mission progress.

We’ve developed teaming behaviors that allow the agents to make informed decisions independently, based on the known objectives of the team. This ability to “fill in the gaps” ensures that operations can continue smoothly even when direct communication is not possible.

Drawing from my graduate research at Carnegie Mellon University, we’ve also adapted techniques to transmit significant amounts of data in compressed forms, which is crucial in low bandwidth situations. We’ve already successfully applied these principles to our Nova 2 quadcopter, allowing teams to communicate and relay information to ground stations efficiently, even with limited resources.

Shobhit joined Shield AI in 2017 and currently leads the Perception group that is focused on observing and understanding the environment and localizing agents within it. Prior to Shield AI, Shobhit completed his Master of Science in Robotics at Carnegie Mellon University, where his research focused on compact efficient map representations for swarming applications.