[November 20, 2024]

The Critical Role of Perception in Autonomous Systems

As defense and aerospace industries increasingly adopt autonomous systems, the demand for more advanced autonomy, such as adaptive, cognitive, and self-learning capabilities, continues to grow. These capabilities unlock critical tactical and operational advantages.

At the heart of such autonomy lies a robust perception stack, which enables decision-making at the edge by integrating sensors, algorithms, and data processing to gather, interpret, and respond to the environment in real time. This capability is foundational for intelligent, resilient autonomy, allowing autonomous platforms to operate safely and effectively in denied, degraded, intermittent, or low-bandwidth (DDIL) environments where GNSS and communications may be unavailable.

Shield AI’s Hivemind Pilot exemplifies industry-leading autonomy, powered by a state-of-the-art perception stack that integrates sensing, state estimation, object detection and identification, tracking, and real-time mapping. Together, these elements support high-level decision-making in shifting and unpredictable environments when offboard or human-in-the-loop processing is not feasible.

Hivemind Pilot

Traditional unmanned aircraft systems (UAS) rely on direct control, with a human in the loop responsible for perceiving the environment, reasoning about it, and directing the aircraft to achieve specific tasks. However, as mission demands intensify and operating environments grow more complex, so does the need for autonomous systems capable of rapid, dynamic decision-making.

Shield AI’s Hivemind Pilot meets these challenges with a robust perception stack designed to support autonomous decision-making. The perception stack constructs an actionable representation of the environment, forming the foundation for cognition and action. Key elements of the perception stack include:

Sensing: Manages sensor interfaces and prioritizes sensor resources so that the data needed for autonomous decision-making is available

State Estimation: Fuses sensor data to provide accurate estimates of position, velocity, and orientation, enabling precise navigation, object tracking, and mission planning

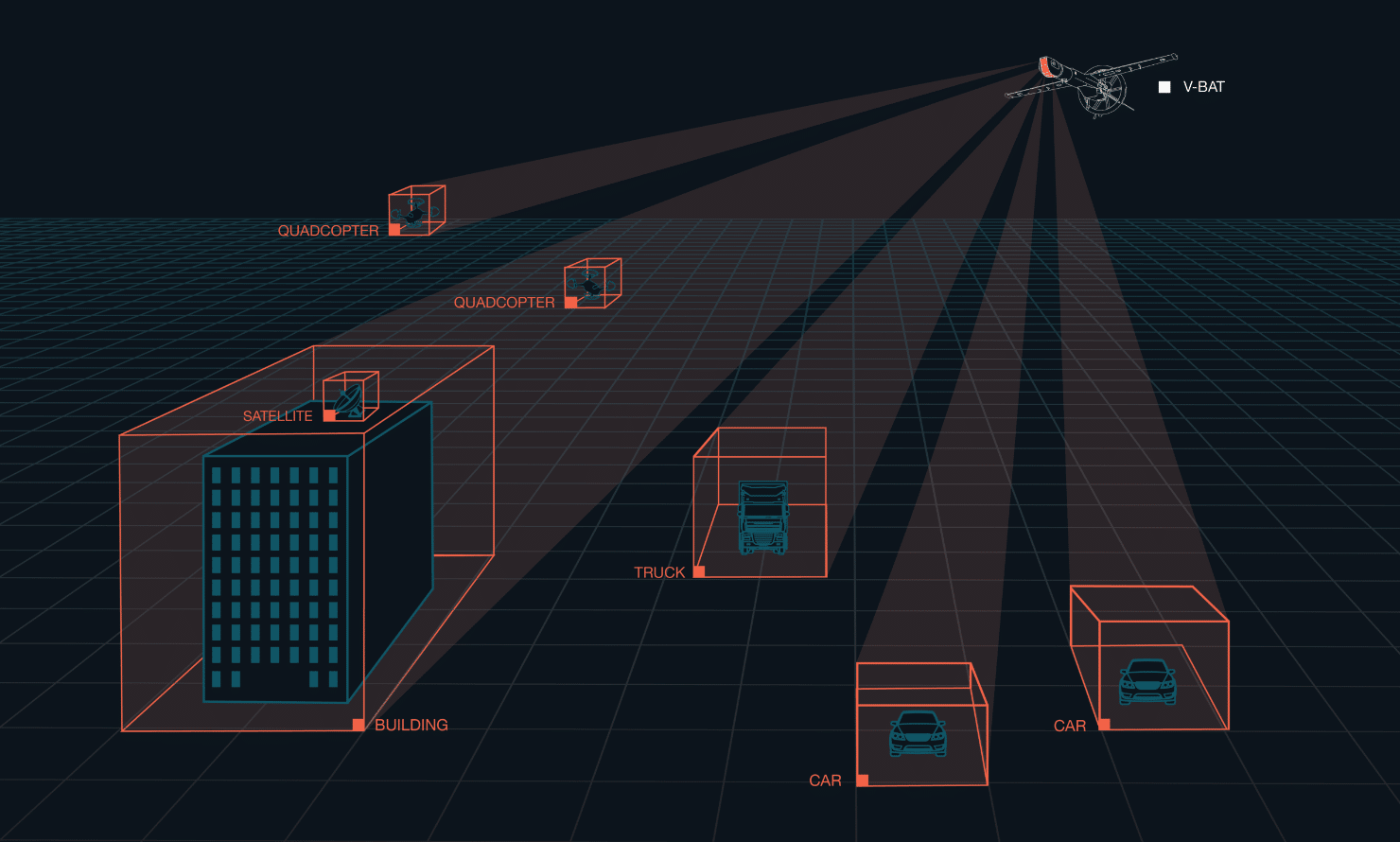

Object Detection & Identification: Uses autonomous detection and recognition algorithms to detect the presence of objects and, in some cases, classify their type

Object Tracking: Correlates and fuses data from multiple sensors over time into unified tracks, improving the system’s understanding of its surroundings

Mapping: Combines information from the other perception modules into a unified set of map views that represent the system’s understanding of the environment, enabling effective decision-making and execution

Together, these components enable Hivemind Pilot to deliver accurate state estimation, object detection, and real-time mapping, bolstering mission safety (e.g., through collision avoidance and path planning). Additionally, when multiple UAS are equipped with Hivemind Pilot, the agents can collaborate to build a distributed global map, improving coordination across the team.

While this blog focuses on the perception stack, it is important to consider how it integrates with Hivemind Pilot’s cognition and action stacks. The cognition stack interprets the system’s state, environment, and mission objectives to generate an actionable plan. The action stack then executes this plan, controlling the platform’s movements and managing payloads. Together, these components form a cohesive, real-time autonomous system capable of addressing complex mission objectives.

Hivemind Pilot’s Perception Interactions

Perception Stack

The following sections explore how each perception component—Sensing, State Estimation, Object Detection & Identification, Object Tracking, and Mapping—contributes to the overall situational awareness of an autonomous platform.

Sensing is a foundational component of the perception stack, acting as the platform’s eyes and ears. The sensor manager oversees and orchestrates the platform’s various sensors and defines standardized output messages for commonly used sensor types. These standardized outputs simplify integration with downstream autonomy blocks, so each sensor’s data can be used effectively. The perception stack ingests data from a variety of sensors, each providing a different view of the environment. Common examples include Electro-Optical (EO) / Infrared (IR) cameras, radar, Signals Intelligence (SIGINT), Global Navigation Satellite System (GNSS) receivers, Inertial Measurement Units (IMUs), Automatic Identification System (AIS), and Identification Friend or Foe (IFF).

The sensor manager is crucial to Hivemind Pilot’s perception stack, providing the essential inputs for safe and effective autonomous operation in complex and unpredictable environments. Designed for extensibility, it supports integration of the various sensor types used across Group 1 to Group 5 UAS platforms.
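
To make the idea of standardized output messages concrete, the sketch below shows one common way to structure it: each sensor gets an adapter that converts its vendor-specific record into a shared message type, so downstream blocks only ever see the standard types. Everything here (`SensorManager`, `ImuSample`, `GnssFix`, the field names, and the milli-g IMU format) is a hypothetical illustration, not Hivemind Pilot’s actual message schema or API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple, Union

Vec3 = Tuple[float, float, float]


# Hypothetical standardized message types; field names are illustrative only.
@dataclass(frozen=True)
class ImuSample:
    t: float      # timestamp, seconds
    accel: Vec3   # specific force, m/s^2, body frame
    gyro: Vec3    # angular rate, rad/s, body frame


@dataclass(frozen=True)
class GnssFix:
    t: float
    lat_deg: float
    lon_deg: float
    alt_m: float


StdMessage = Union[ImuSample, GnssFix]
Adapter = Callable[[dict], StdMessage]


class SensorManager:
    """Routes raw, vendor-specific records through per-sensor adapters."""

    def __init__(self) -> None:
        self._adapters: Dict[str, Adapter] = {}

    def register(self, sensor_id: str, adapter: Adapter) -> None:
        self._adapters[sensor_id] = adapter

    def ingest(self, sensor_id: str, raw: dict) -> StdMessage:
        if sensor_id not in self._adapters:
            raise KeyError(f"no adapter registered for sensor {sensor_id!r}")
        return self._adapters[sensor_id](raw)


def vendor_imu_adapter(raw: dict) -> ImuSample:
    """Hypothetical IMU reporting microsecond timestamps and acceleration in milli-g."""
    g = 9.80665
    ax, ay, az = (v * g / 1000.0 for v in raw["acc_mg"])
    gx, gy, gz = raw["gyr_rad_s"]
    return ImuSample(t=raw["ts_us"] / 1e6, accel=(ax, ay, az), gyro=(gx, gy, gz))


mgr = SensorManager()
mgr.register("imu0", vendor_imu_adapter)
sample = mgr.ingest("imu0", {"ts_us": 1_500_000, "acc_mg": [0, 0, 1000], "gyr_rad_s": [0.0, 0.0, 0.1]})
```

Adding a new sensor model then means writing one adapter; consumers of `ImuSample` do not change.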

The State Estimation module provides accurate, refined estimates of position, attitude, velocity, and acceleration for control and navigation. At the core of Hivemind Pilot’s state estimator is a Factor Graph Optimizer (FGO), originally developed for Shield AI’s Nova 2 Group 1 UAS. This FGO-based approach goes beyond traditional Kalman filters by offering the flexibility to handle diverse sensor measurements and complex, nonlinear constraints, making it well suited to tasks like Simultaneous Localization and Mapping (SLAM) and advanced sensor fusion.

The FGO supports both batch and incremental optimization, making it effective for large-scale tasks such as loop closure and reprocessing past data to improve positional accuracy. These capabilities are difficult for a conventional Kalman filter, which folds past states into its current estimate and cannot easily revise them when new information arrives. By continually aggregating and refining data from multiple sensors, the state estimator maintains georeferenced positional accuracy, allowing the platform to recognize potential hazards, adjust its trajectory, and modify operational parameters in real time.
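
The difference between a factor graph and a filter is easiest to see in a toy case. The sketch below is a minimal, one-dimensional batch example with linear factors only: a prior on the first pose, biased odometry between consecutive poses, and, optionally, an absolute fix on the last pose (for example, from re-observing a known landmark). Solving all factors jointly by weighted least squares spreads the correction back over the earlier poses, which is the "reprocessing past data" behavior described above. This is an illustrative assumption, not Shield AI’s implementation; production FGOs work on nonlinear 3D factors with iterative solvers (e.g., Gauss-Newton) and incremental updates.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LinearFactor:
    """Hypothetical linear factor: sum(coeffs[k] * x[idx[k]]) should equal z (std dev sigma)."""
    idx: List[int]
    coeffs: List[float]
    z: float
    sigma: float


def solve_batch(n: int, factors: List[LinearFactor]) -> List[float]:
    """Weighted least squares over all factors at once (a batch solve)."""
    # Accumulate the information matrix H = A^T W A and vector g = A^T W z.
    H = [[0.0] * n for _ in range(n)]
    g = [0.0] * n
    for f in factors:
        w = 1.0 / f.sigma ** 2
        for i, ci in zip(f.idx, f.coeffs):
            g[i] += w * ci * f.z
            for j, cj in zip(f.idx, f.coeffs):
                H[i][j] += w * ci * cj
    # Solve H x = g by Gaussian elimination with partial pivoting.
    M = [row + [gi] for row, gi in zip(H, g)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            scale = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= scale * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


# Four 1-D poses: a tight prior on x0 and biased odometry steps of 1.1 m.
factors = [LinearFactor([0], [1.0], 0.0, 0.01)]
factors += [LinearFactor([i, i + 1], [-1.0, 1.0], 1.1, 0.1) for i in range(3)]
dead_reckoning = solve_batch(4, factors)

# Add an absolute fix on x3 and re-solve: x1 and x2 are revised too, not just x3.
factors.append(LinearFactor([3], [1.0], 3.0, 0.1))
smoothed = solve_batch(4, factors)
```

With these numbers the odometry alone puts `x3` at 3.3 m; after the fix, the 0.3 m disagreement is shared among the factors in proportion to their variances, so every odometry step shrinks slightly instead of the whole error landing on the last pose.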

Hivemind Pilot’s state estimator is explicitly designed to perform in DDIL environments where GNSS and stable communications may be unavailable. This ensures the autonomous platform can reliably maintain its situational awareness and adapt to complex, unpredictable conditions, even in the most challenging environments.

The Object Detection & Identification and Object Tracking blocks work together within the perception stack, enabling autonomous systems to detect, identify, and track static and moving objects in real time, drawing on data from various sensors. Together, these modules operate a detect, identify, locate, and report (DILR) cycle, delivering real-time environmental awareness.

The Object Detection & Identification block employs machine learning algorithms (for search characteristics, feature extraction, and similar tasks) that underpin autonomous detection and recognition models, identifying mission-relevant objects and reporting confidence metrics for each. Hivemind Pilot supports integration of detection and recognition algorithms, including Shield AI’s Sentient Tracker as well as third-party solutions (Dead Center, Maven Smart, etc.), for greater end-user flexibility.
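
Supporting both in-house and third-party detectors usually comes down to a shared interface. The sketch below assumes a hypothetical `Detector` protocol that every model wrapper implements, plus a `Detection` record carrying a label and a confidence score; the stack queries all registered detectors and keeps results above a confidence threshold. The names, fields, and the 0.5 threshold are illustrative assumptions, not the actual integration interface for Sentient Tracker or any third-party product.

```python
from dataclasses import dataclass
from typing import Iterable, List, Protocol


@dataclass(frozen=True)
class Detection:
    label: str          # object class, e.g. "vehicle"; may be "unknown"
    confidence: float   # detector-reported score in [0, 1]
    bearing_deg: float  # direction to the object from the sensor
    source: str         # which detector produced it


class Detector(Protocol):
    """Hypothetical interface any in-house or third-party model wrapper implements."""
    name: str

    def detect(self, frame: bytes) -> Iterable[Detection]: ...


def run_detectors(detectors: List[Detector], frame: bytes, min_conf: float = 0.5) -> List[Detection]:
    """Query every registered detector, keep confident results, highest confidence first."""
    results: List[Detection] = []
    for det in detectors:
        results.extend(d for d in det.detect(frame) if d.confidence >= min_conf)
    return sorted(results, key=lambda d: d.confidence, reverse=True)


class StubDetector:
    """Stand-in for a real model; returns canned detections."""

    def __init__(self, name: str, outputs: List[Detection]) -> None:
        self.name = name
        self._outputs = outputs

    def detect(self, frame: bytes) -> Iterable[Detection]:
        return list(self._outputs)


in_house = StubDetector("in_house", [Detection("vehicle", 0.9, 10.0, "in_house"),
                                     Detection("unknown", 0.3, 50.0, "in_house")])
third_party = StubDetector("third_party", [Detection("vehicle", 0.7, 11.0, "third_party")])
confident = run_detectors([in_house, third_party], frame=b"")
```

Because `Detector` is a structural protocol, a wrapper does not need to inherit from anything; it only needs a `name` and a `detect` method with this shape.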

The Object Tracker module correlates and fuses sensor data to create accurate, unified tracks, offering a coherent understanding of the operational environment. This data informs prioritized responses based on identified objects, allowing Hivemind Pilot to distinguish between hazards and mission-critical assets.
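
Correlation and fusion can be illustrated with one of the simplest textbook schemes: gated nearest-neighbor association followed by inverse-variance fusion. For each incoming measurement, the sketch finds the closest existing track in variance-normalized distance; if it falls inside the gate, the measurement is fused into that track, otherwise it starts a new track. This is a deliberately simplified assumption (static targets, isotropic 2D uncertainty, greedy association); it is not a description of Hivemind Pilot’s tracker, and operational trackers typically add motion models and more robust association.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Track:
    track_id: int
    pos: Tuple[float, float]   # estimated position, metres, local frame
    var: float                 # isotropic position variance, m^2


@dataclass
class Measurement:
    pos: Tuple[float, float]
    var: float                 # sensor-specific variance, m^2


def fuse(track: Track, m: Measurement) -> None:
    """Inverse-variance fusion of one measurement into a (static) track."""
    k = track.var / (track.var + m.var)
    track.pos = (track.pos[0] + k * (m.pos[0] - track.pos[0]),
                 track.pos[1] + k * (m.pos[1] - track.pos[1]))
    track.var = (1 - k) * track.var


def update_tracks(tracks: List[Track], meas: List[Measurement], gate_sigma: float = 3.0) -> List[Track]:
    next_id = max((t.track_id for t in tracks), default=0) + 1
    for m in meas:
        best, best_d = None, math.inf
        for t in tracks:
            # Distance normalized by the combined uncertainty of track and measurement.
            d = math.dist(t.pos, m.pos) / math.sqrt(t.var + m.var)
            if d < best_d:
                best, best_d = t, d
        if best is not None and best_d <= gate_sigma:
            fuse(best, m)                                 # correlate with an existing track
        else:
            tracks.append(Track(next_id, m.pos, m.var))   # start a new track
            next_id += 1
    return tracks


tracks = [Track(1, (0.0, 0.0), 4.0)]
update_tracks(tracks, [Measurement((1.0, 0.0), 4.0), Measurement((100.0, 100.0), 1.0)])
```

Here the first measurement is close enough to fuse into track 1 (halving its variance), while the second is far outside the gate and becomes track 2.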

Example of Shield AI’s Sentient Tracker Detecting Moving Targets

The final component of the perception stack is Mapping, which consolidates inputs from sensors, perception blocks, and radio/data-link systems to produce detailed, georeferenced maps. These maps provide real-time information on dynamic terrain, obstacles, boundaries, and other environmental features, enabling situational awareness in complex environments. The Mapping block also contains the Geozone Server, which manages three-dimensional, mission-configurable data such as airspace control measures (ACM), fire support coordination measures (FSCM), air defense measures (desired engagement zones, etc.), and civil air traffic control (ATC) airspace.
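
A three-dimensional geozone is often modeled as a horizontal polygon extruded between a floor and a ceiling altitude. The sketch below assumes that representation, with coordinates already projected into a local metric frame, and answers the basic query a geozone server must support: which zones contain a given position. The `Geozone` fields and zone kinds are hypothetical; real airspace measures may use other shapes (circles, corridors) and geodetic coordinates.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Geozone:
    name: str
    kind: str                              # e.g. "ACM", "FSCM", "ATC" (illustrative labels)
    polygon: List[Tuple[float, float]]     # horizontal boundary, local x/y in metres
    floor_m: float
    ceiling_m: float


def point_in_polygon(x: float, y: float, poly: List[Tuple[float, float]]) -> bool:
    """Even-odd ray-casting test: count boundary crossings of a ray toward +x."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def zones_containing(zones: List[Geozone], x: float, y: float, alt_m: float) -> List[str]:
    """Names of all zones whose vertical band and horizontal boundary contain the point."""
    return [z.name for z in zones
            if z.floor_m <= alt_m <= z.ceiling_m and point_in_polygon(x, y, z.polygon)]


square = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0), (0.0, 100.0)]
zones = [Geozone("restricted_box", "ACM", square, floor_m=0.0, ceiling_m=500.0)]
```

Checking the vertical band first is a cheap filter before the more expensive polygon test.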

Integrated with State Estimation, the Mapping block supports real-time localization, providing precise positional data critical for safe and effective navigation in GNSS-contested environments. Within this block, Hivemind Pilot’s Distributed Global Map (DGM) produces a comprehensive representation of the environment that, when used with autonomous teams, is synchronized across all agents to support coordinated operations in complex environments. This integrated approach allows agents to make informed decisions, adjust to dynamic conditions, and collaborate effectively within a team.
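
One way to keep a shared map consistent when updates arrive over unreliable links is a merge rule that gives the same result regardless of message order or duplication. The sketch below assumes a grid map where each cell stores a timestamped observation tagged with the agent that made it, and merges maps with a last-writer-wins rule (ties broken by agent ID). The grid representation and merge rule are illustrative assumptions; the source does not describe how the DGM actually synchronizes.

```python
from typing import Dict, Tuple

Cell = Tuple[int, int]            # grid index (row, col)
Entry = Tuple[float, str, str]    # (timestamp_s, agent_id, observation)
GridMap = Dict[Cell, Entry]


def merge(a: GridMap, b: GridMap) -> GridMap:
    """Last-writer-wins merge per cell; (timestamp, agent_id) ordering makes it deterministic."""
    out = dict(a)
    for cell, entry in b.items():
        if cell not in out or entry[:2] > out[cell][:2]:
            out[cell] = entry
    return out


agent_a: GridMap = {(0, 0): (1.0, "A", "clear"), (0, 1): (5.0, "A", "obstacle")}
agent_b: GridMap = {(0, 0): (3.0, "B", "obstacle"), (1, 1): (2.0, "B", "clear")}
shared = merge(agent_a, agent_b)
```

Because this merge is commutative, associative, and idempotent, agents that exchange maps in any order, or receive the same update twice, converge to the same result.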

The Importance of Perception

Effective perception provides the foundation for all subsequent decision-making, enabling autonomous systems to receive, process, and interpret environmental data in real time. In frameworks like the OODA loop (Observe, Orient, Decide, Act), timely, accurate observation is critical for faster and more effective orientation, decision, and action. Similarly, in the PCA model (Perception, Cognition, Action), perception contextualizes data and serves as the critical first step that shapes all subsequent actions.

Human-centric OODA loop and the autonomy-centric PCA loop

Without perception at the edge, this process falters and instead requires continual, task-saturating operator-in-the-loop intervention. This approach is slow, limits scalability, and hinders the application of more advanced adaptive and cognitive autonomy. When a human operator must cover both perception and cognitive functions, mission effectiveness depends on communication links, creating critical vulnerabilities in DDIL environments where links are unreliable or absent.

Poorly designed perception stacks are also problematic. Incomplete or inaccurate perception introduces errors into the cognition stack, leading to flawed situational awareness, unreliable decision-making, and compromised autonomy. Such weaknesses may be masked in basic assisted autonomy but are magnified when scaling to the advanced autonomy required for mission success in complex environments.

A robust perception stack is therefore foundational to the safety, reliability, suitability, and effectiveness of autonomous systems. First, it enables systems to navigate a range of dynamic and unpredictable environments, managing obstacles and threats independently. Second, autonomous systems with strong perception capabilities can continue to operate even when GNSS and/or communications are compromised.

Finally, with autonomy managing individual platform performance, the operator can focus on higher-level integration, battle management, and theater coordination. The operator remains on the loop, providing oversight and direction when necessary. This balance ensures resilience while enabling machine-speed decisions and scalability for tactical advantage.

Conclusion

Hivemind Pilot is designed for the portability, adaptability, modularity, and scalability required to support advanced autonomy, allowing customers to meet present and future operational challenges with confidence. A strong perception stack deployed at the edge is critical to achieving the advanced capabilities and operational effectiveness demanded of autonomous systems. Shield AI’s Hivemind Pilot and its robust perception stack provide cutting-edge solutions for autonomous systems operating in dynamic and DDIL environments.

About the Author

Willy Logan is a seasoned aerospace engineer and Senior Director of Engineering at Shield AI, where he leads a team of Chief Engineers in integrating Shield AI’s autonomous AI Pilot (Hivemind) across Group 1-5 uncrewed aircraft. With a strong track record in both DoD Programs of Record and fast-paced R&D, Willy brings extensive experience in delivering complex hardware and software solutions. Before joining Shield AI, Willy spent over 12 years at General Atomics Aeronautical Systems (GA-ASI), where he held roles including Deputy Director of Systems Engineering for the MQ-9B UK Protector and Chief Engineer for mission systems development. Willy holds a B.S. and M.S. in Mechanical and Aeronautical Engineering from UC Davis. A San Diego local, he enjoys surfing and exploring the city’s best taco spots with his family.