[August 21, 2018]

What Happens When Robots Work Together

You have dedicated your career to researching collective intelligence. How do you think about teams of robots working together? Where does that begin and how does it work?

We start with a single robot, then move to small teams of robots working together. At the same time, we develop the frameworks required to enable concurrent collective intelligence. Through transfer learning, synthetic experience generation, real-world experience, and cross-validation, we are developing the core technologies that make it possible for robots to work together seamlessly. These technologies allow a single robot to share information with other robots and with people, and to learn and understand human intent as people work with the system.

As we build in these technologies and lay these foundations, we can think about more and more robots working together. And the more robots you add, the more flexibility you have in how those robots coordinate. This is where you get into ideas relating to teaming, tasking, and online redistribution of resources and load across the system. This is where you start to build in learning of meta behaviors across teaming and tasking.
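The interview does not say how tasks and load are allocated. As a hedged illustration of what online redistribution of load can look like, this Python sketch uses a standard greedy heuristic: give the largest remaining task to the least-loaded robot, and when a robot drops out, reassign its tasks the same way. The task names, costs, and the `assign_tasks` and `redistribute` functions are hypothetical.

```python
import heapq

# Hypothetical sketch: task names, costs, and both functions are illustrative
# only; this is a textbook greedy heuristic, not Shield AI's allocator.

def assign_tasks(task_costs, num_robots):
    """Assign tasks largest-first, each to the currently least-loaded robot."""
    heap = [(0.0, robot) for robot in range(num_robots)]  # (load, robot id)
    heapq.heapify(heap)
    assignment = {robot: [] for robot in range(num_robots)}
    for task in sorted(task_costs, key=task_costs.get, reverse=True):
        load, robot = heapq.heappop(heap)  # least-loaded robot
        assignment[robot].append(task)
        heapq.heappush(heap, (load + task_costs[task], robot))
    loads = {robot: load for load, robot in heap}
    return assignment, loads


def redistribute(assignment, task_costs, failed_robot):
    """Online repair: hand a lost robot's tasks to the remaining robots.

    Mutates and returns `assignment`."""
    orphaned = assignment.pop(failed_robot)
    heap = [(sum(task_costs[t] for t in tasks), robot)
            for robot, tasks in assignment.items()]
    heapq.heapify(heap)
    for task in sorted(orphaned, key=task_costs.get, reverse=True):
        load, robot = heapq.heappop(heap)
        assignment[robot].append(task)
        heapq.heappush(heap, (load + task_costs[task], robot))
    return assignment


costs = {"a": 4, "b": 3, "c": 3, "d": 2}
plan, loads = assign_tasks(costs, 3)
print(plan)                          # {0: ['a'], 1: ['b', 'd'], 2: ['c']}
print(redistribute(plan, costs, 0))  # {1: ['b', 'd'], 2: ['c', 'a']}
```

A fielded system would also weigh travel time, robot capabilities, and communication limits, none of which appear in this toy cost model.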

So we proceed from one robot to many, with behaviors that become increasingly sophisticated. At Shield AI, we can pursue this objective much more rapidly because we can lean on foundations that have been developed extensively in academia over the last several decades.

Can you tell me how you thought about robots working together several years ago, following the nuclear accident at the three Fukushima Daiichi reactors?

For Fukushima, we considered just two robots, a ground robot and an aerial robot, in response to the reactor explosion. The effort was a collaboration with colleagues in Japan who developed the ground robot, while we were responsible for the aerial robot. The idea was to take a ground robot and an aerial robot and explore a building that, given the situation, was inaccessible to both people and robots. A person would remotely guide the ground robot, which carried a tether, into the building. The aerial robot would then take off from the ground robot at specific locations of interest, fly around the building, and build a model of the environment so that the remote operator and the people working with them could understand what had happened there. While flying and modeling the environment, the aerial robot would also map radiation levels, temperature, and humidity, among other sensor observations of interest. As its battery drained, the robot would continually plan and assess whether it could still return and land on the ground robot. After recharging, the aerial robot would be taken to another location, take off again, and continue the exploration.
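The interview does not describe how the aerial robot decided whether it could still make it home. One simple way to frame that check is sketched below in Python, under loudly stated assumptions: the map is a 2-D occupancy grid (the real vehicle flew in 3-D), the ground robot sits at a known cell, and flight costs a fixed, hypothetical `wh_per_step` of energy per grid step plus a `reserve_wh` safety margin. `path_length` and `can_return` are illustrative names, not part of any system described here.

```python
from collections import deque

# Hypothetical sketch: a 2-D grid and a constant energy cost per step stand in
# for the real 3-D map and energy model, which the interview does not describe.

def path_length(grid, start, goal):
    """Breadth-first search over a 4-connected grid; grid[r][c] is True if
    blocked. Returns the number of steps from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])
    frontier = deque([start])
    dist = {start: 0}
    while frontier:
        cell = frontier.popleft()
        if cell == goal:
            return dist[cell]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and not grid[nr][nc] and (nr, nc) not in dist):
                dist[(nr, nc)] = dist[cell] + 1
                frontier.append((nr, nc))
    return None


def can_return(grid, position, base, battery_wh, wh_per_step, reserve_wh):
    """True if the energy to fly back to the base plus a reserve fits in the
    remaining battery."""
    steps = path_length(grid, position, base)
    if steps is None:
        return False  # no known path home: treat as unsafe
    return steps * wh_per_step + reserve_wh <= battery_wh


grid = [
    [False, False, False],
    [True,  True,  False],  # a wall the drone must fly around
    [False, False, False],
]
print(path_length(grid, (2, 0), (0, 0)))  # 6
print(can_return(grid, (2, 0), (0, 0), battery_wh=10, wh_per_step=1, reserve_wh=3))  # True
print(can_return(grid, (2, 0), (0, 0), battery_wh=8, wh_per_step=1, reserve_wh=3))   # False
```

Real energy use depends on wind, speed, and hover time, so the fixed per-step cost is only a placeholder for whatever model a real planner would use.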

The idea here was that the two robots would work together to overcome each other’s limitations. The aerial robot would be powered by the ground robot and communicate with it, and the ground robot would use the tether it pulled behind it to transfer that power and enable remote communication.

One of the biggest challenges was that we had to build autonomy for both the ground and the aerial robot. The ground robot could be a little less autonomous because a human was operating it remotely; it still had a lot of capabilities, but it didn’t have to think for itself. The aerial robot, however, would have to think for itself. It would have to build a map of the environment, take off, and fly through a rich, cluttered 3-D environment with variable wind conditions, hanging wires, and obstacles in abundance. As it built that map, the aerial robot would have to explore the environment while taking into account what it was seeing and modeling. It would combine all its sensor observations into a common map of the environment and constantly ask the question, “Can I go back and land on the ground robot?” When it returned to the ground robot, it would say, “This is the world as I see it,” and the ground robot would say something similar. The aerial robot would then compare the two views, compute the relevant transforms between them, and establish a common local map so that the two robots could plan together.
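Computing “the relevant transforms” is described only at a high level. As a hedged illustration, if we assume both robots have already matched the same landmarks in their own 2-D frames (finding those correspondences is often the hard part and is not shown), the least-squares rotation and translation between the frames has a closed form, the 2-D case of Kabsch-style rigid alignment. The function names and landmark coordinates below are hypothetical; a 3-D system would typically use the SVD-based version.

```python
import math

# Hypothetical sketch: assumes known landmark correspondences in 2-D; not the
# method used in the Fukushima work, which the interview does not detail.

def estimate_rigid_transform_2d(src, dst):
    """Least-squares rotation theta and translation t with R(theta)*src + t ~ dst.
    src[i] and dst[i] are the same landmark seen in two robots' frames."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # Accumulate dot and cross terms of the centered point pairs; the optimal
    # angle maximizes cos(theta) * dot + sin(theta) * cross.
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation carries the rotated source centroid onto the dest centroid.
    return theta, (dx - (c * sx - s * sy), dy - (s * sx + c * sy))


def apply_transform(theta, t, point):
    """Map a point from the source frame into the destination frame."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = point
    return (c * x - s * y + t[0], s * x + c * y + t[1])


src = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0)]  # landmarks in the aerial robot's frame
dst = [(3.0, 1.0), (3.0, 2.0), (1.0, 1.0)]  # same landmarks in the ground robot's frame
theta, t = estimate_rigid_transform_2d(src, dst)
print(round(math.degrees(theta), 6), [round(v, 6) for v in t])  # 90.0 [3.0, 1.0]
```

Once the transform is known, every point in one robot’s map can be re-expressed in the other’s frame with `apply_transform`, which is what lets the two maps be fused into a shared one.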

And on the topic of the high-consequence domain in which you now work, how do you think about Shield AI and multi-robot collaboration?

Collective intelligence is going to be very powerful within this domain. Just as people working together can achieve greater objectives than a single person could, collective intelligence and coordinated multi-robot systems will do the same: multiple robots can work together to achieve things that none of them could achieve on its own.

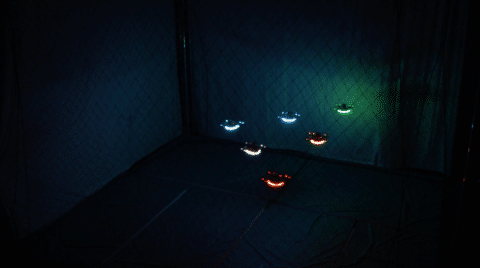

[Corah and Michael, RSS, 2017]

In multi-robot coordination and collective intelligence, we talk about the idea of super linear contribution. Here is the idea behind it. Say we want to mow a lawn. If one of us mows it alone, it takes an hour. If the two of us work together, it takes 30 minutes. If four of us work together, it might take 15 minutes total. That is just linear contribution: the job is something either a single person or many people could do, and it simply gets done faster as more people work on it. But with autonomous systems, by leveraging collective intelligence, coordination, and the robots’ ability to work together, you can begin to see significant outcomes that we call “super linear contribution.” This means the robots can now do things together that would simply be infeasible otherwise. A great example is working together to explore an environment and make decisions that produce an accurate representation of that environment while ensuring that no areas are missed.
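The exploration example depends on robots not duplicating each other’s work. Below is a minimal Python sketch of one well-known coordination idea, greedy assignment of robots to distinct exploration frontiers (the boundary cells between mapped and unmapped space). It is our illustration, not a method attributed to Shield AI or to the cited paper, and on its own it shows coordination rather than super linear contribution. Robot names, positions, and frontier cells are hypothetical, and Manhattan distance stands in for real path cost.

```python
# Hypothetical sketch: names, positions, and the distance metric are
# illustrative assumptions, not taken from the interview.

def manhattan(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def independent_choice(robots, frontiers):
    """Uncoordinated baseline: every robot heads to its own nearest frontier."""
    return {name: min(frontiers, key=lambda f: manhattan(pos, f))
            for name, pos in robots.items()}


def assign_frontiers(robots, frontiers):
    """Coordinated greedy assignment: repeatedly take the closest remaining
    (robot, frontier) pair so no two robots target the same frontier."""
    pairs = sorted((manhattan(pos, f), name, f)
                   for name, pos in robots.items()
                   for f in frontiers)
    assigned, taken = {}, set()
    for _, name, f in pairs:
        if name not in assigned and f not in taken:
            assigned[name] = f
            taken.add(f)
    return assigned


robots = {"r1": (0, 0), "r2": (0, 1)}
frontiers = [(0, 2), (5, 0)]
print(independent_choice(robots, frontiers))  # {'r1': (0, 2), 'r2': (0, 2)}
print(assign_frontiers(robots, frontiers))    # {'r2': (0, 2), 'r1': (5, 0)}
```

Without coordination both robots chase the same frontier and the far area stays unexplored; with it, each robot takes a different frontier, which is the kind of decision-making the exploration example relies on.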

There are many situations in which coordination and collective intelligence can dramatically increase the capacity of the system. In the intelligence context, when a single robot can learn and share its knowledge with other robots, and those robots share their knowledge with each other, then as one robot learns, they all learn. You are learning concurrently and increasing knowledge exponentially across the system. That is a huge enabler, because we can exploit this distributed intelligence to rapidly scale how these systems think and work together.
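How robots actually share what they learn is not specified here. As a toy illustration of “as one robot learns, they all learn,” this Python sketch assumes each robot keeps a running mean of some measured quantity per map cell (for example, a radiation reading; the cell keys are hypothetical) and that robots pool their estimates by count-weighted averaging. The `Knowledge` class and `share_all` function are assumptions made for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical sketch: the data model and merge rule are illustrative
# assumptions, not a description of Shield AI's knowledge sharing.

@dataclass
class Knowledge:
    """Per-robot estimates: key -> (observation count, mean value)."""
    stats: dict = field(default_factory=dict)

    def observe(self, key, value):
        n, mean = self.stats.get(key, (0, 0.0))
        n += 1
        mean += (value - mean) / n  # incremental running-mean update
        self.stats[key] = (n, mean)

    def merged(self, other):
        """Count-weighted combination of two robots' estimates."""
        out = dict(self.stats)
        for key, (n2, m2) in other.stats.items():
            n1, m1 = out.get(key, (0, 0.0))
            out[key] = (n1 + n2, (n1 * m1 + n2 * m2) / (n1 + n2))
        return Knowledge(out)


def share_all(robots):
    """Pool every robot's knowledge and hand each robot its own pooled copy."""
    pooled = Knowledge()
    for k in robots:
        pooled = pooled.merged(k)
    return [Knowledge(dict(pooled.stats)) for _ in robots]


a, b = Knowledge(), Knowledge()
a.observe("cell_12", 2.0)
a.observe("cell_12", 4.0)
b.observe("cell_12", 6.0)
b.observe("cell_40", 1.0)  # only robot b has seen this cell
a, b = share_all([a, b])
print(a.stats)             # {'cell_12': (3, 4.0), 'cell_40': (1, 1.0)}
print(a.stats == b.stats)  # True
```

After one round of sharing, robot `a` knows about `cell_40` without ever visiting it. Merging pooled copies again later would double-count observations, so a real system would need to track which observations each robot has already received.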