[August 14, 2018]

What is the Role of Trust in Robotics?

How did you approach the experience of building GAMS/MADARA?

I came from a middleware group called the Distributed Object Computing group at Vanderbilt. My training was primarily in middleware, but I had a real passion for AI. I wanted to influence how we develop distributed AI, and how we represent and command a swarm of robots that can help people be more efficient, more effective, and safer. I approached building middleware for AI from the idea that distributed AI, especially, is a serious problem that we need to solve, and we need to have good tools that we can trust. This sense of impending necessity informed how GAMS and MADARA were built. The middleware layers were developed to help establish trust and focus on the process of responsive software that will operate correctly in a high-performance, safe, predictable, and effective manner.

Why is it important that robots work together?

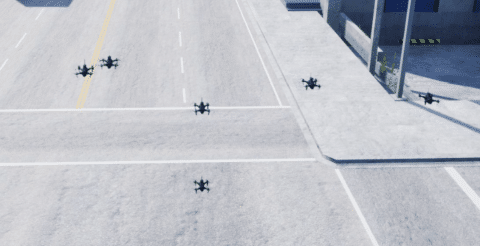

There are certain robotics problems that can be solved by one agent diligently working on an activity, but these kinds of problems are a really small subset of what is possible. Imagine a single person trying to dig the Panama Canal or build a skyscraper. If we try to do everything by ourselves, projects are more likely to fail, take longer, and be of lower quality than if we bring in the right people with the right specializations and have them all work together. Robotics moves in the same direction. By making specialized robots that work well together, we can do more, and we can do it more quickly, more effectively, more safely, and with higher quality.

The dangers of collaborative robotics are really in human perception and interaction with these systems. There’s a certain narrative in science fiction that has been going on for decades, and it’s really difficult to unwind the uncertainty and fear that come along with people being in an environment where metal robots are working together. There’s a lack of trust that we have to help eradicate through verification, validation, education, and good software engineering practices. Successful collaboration between distributed robotics and human operators is going to require focused effort in clarifying robot intent and actions and increasing trust between humans and multi-agent systems. If we can do that, we’re going to see an exponential increase in human productivity, efficiency, effectiveness, and safety.

What is the hardest problem you’ve ever tackled?

For me, and I think for any software engineer, if there is a technical problem that I’ve already solved, then it doesn’t feel like a particularly hard problem in retrospect. If it were truly difficult, it would still be unsolved.

So, for me, it’s really about the future, and the focus areas of Scalable Infrastructure are really exciting to me because we have to take such an interdisciplinary approach to solving really hard human-interaction and collaborative intelligence problems. There are a lot of technical problems in scalability, correctness, safety, and security. There are also a lot of problems in collaboration with large-scale deployments of robotics.

How do we prove correctness of a full robotic system acting by itself in a mission-critical, unstructured environment with asynchronous events? How do we prove the correctness and safety of two robots working together? Ten robots? 100 robots? 1,000 robots? How can we telegraph our intent to humans as an individual robot or as a group of robots to establish trust? How can we protect people and make them more efficient and effective in high cognitive load situations like a firefighter running into a burning building or a group of soldiers under fire during an ambush? How do we process data from 1,000 robots, learn from our mistakes, and make better deployments of hundreds and thousands of robots for future use? How do we make the human interaction and experience of command-and-control, analysis, and knowledge transfer to other robots and human operators as transparent, seamless, effective, and efficient as possible? How do we not overwhelm people with too much information?

There are so many really fun, hard problems to solve in Scalable Infrastructure. The best is yet to come!

What was it that attracted you to work here?

Chief Science Officer Nathan Michael and I had been looking for ways to work together over the past few years while we were both at Carnegie Mellon University. We had done a proposal with Senior Principal Scientist Ali Momeni to present viable solutions to the human-robot collaboration problem with swarms, and we really believed in what we were proposing. I think we were all attracted to the company because we really love to work on hard problems that will have a major impact on humanity and the future of artificial intelligence.

Now that the three of us are here at Shield AI, it’s no longer just about working with Nathan, who is a brilliant researcher and person; there is also this really remarkable, diverse set of people with specialized and very useful skills, unique personalities, and interesting backgrounds. Everyone shares a vision of what the next generation of robotics should be. I think we all see the potential of what we’re working on and a future where people are safer and more productive with AI. There’s a buzz, an excitement, and also a humility here that is unique and really important to the culture and direction of the company. These traits help shape who we are and where we’re going, and I’m excited to see what we will create in the next five to ten years.