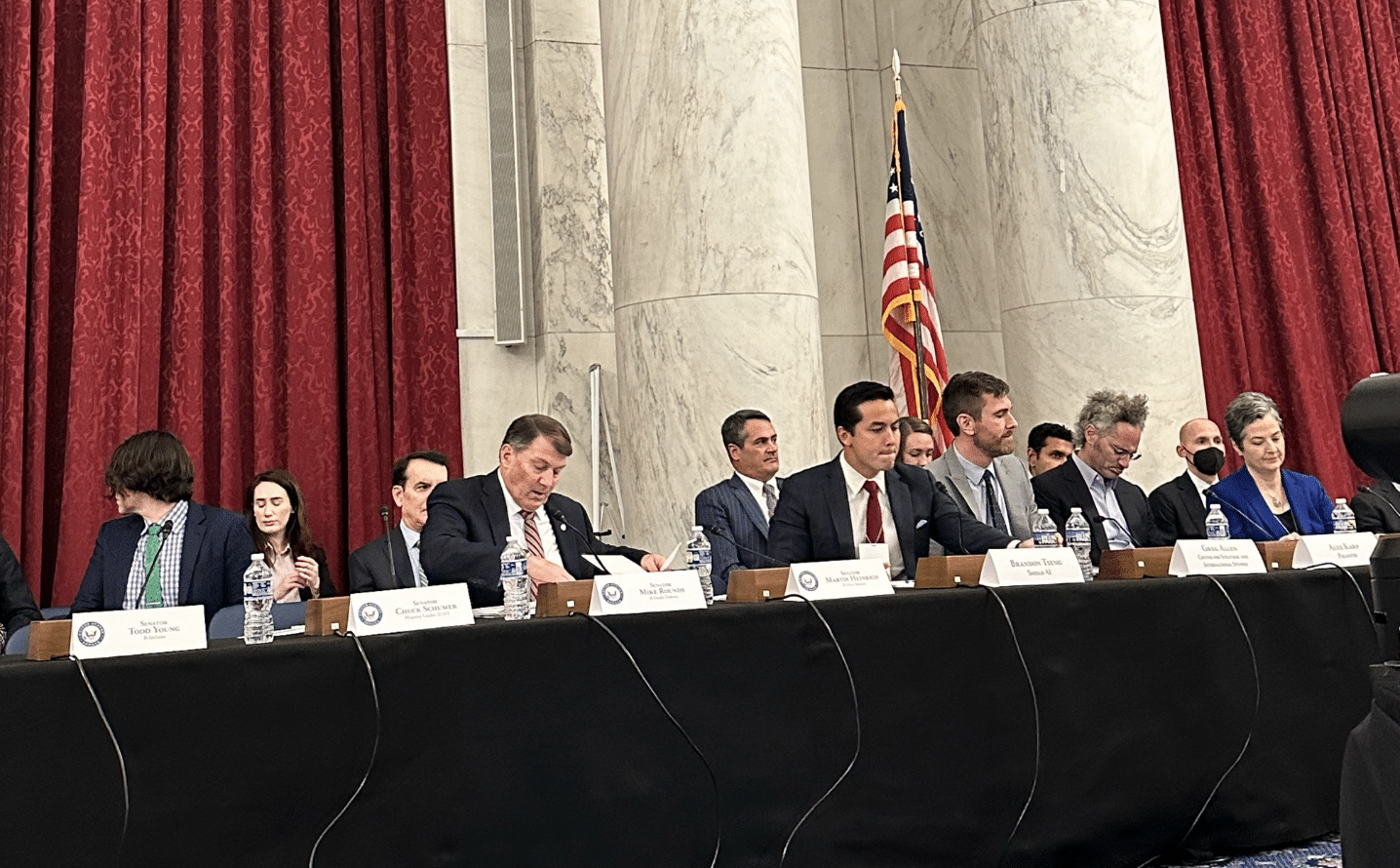

Written Statement:

Brandon Tseng, President and Co-Founder

Shield AI

December 6, 2023

Majority Leader Schumer, Senator Rounds, Senator Heinrich, and Senator Young:

Thank you for hosting these AI Insight Forums, particularly the one today focusing on National Security. The legislative work you’re doing is vital, and we appreciate your swift action and consideration. As you work with your colleagues to craft meaningful legislation, I suggest you include appropriate regulatory waivers for AI used for national security, provide stable funding with contracting reform, support military leaders working to improve innovation at the Department of Defense (DoD), and help the public validate and trust new technologies.

My name is Brandon Tseng. I am the President and Co-Founder of Shield AI, one of two multibillion-dollar defense-tech companies founded in the past 20 years. I’m also an engineer and a former Navy SEAL with deployments to the Arabian Gulf, the Pacific Theater, and twice to Afghanistan. Shield AI’s mission is to protect service members and civilians with AI systems. At Shield AI we are building the world’s best AI pilot, which is self-driving autonomy technology for aircraft. We are the only company in the world that has deployed aircraft that can operate without GPS, communications, or remote pilots and still execute a mission. We have more flight hours flying actual F-16s completely autonomously in fighter combat scenarios than any company in the world, and we are the only company to have launched a swarming product on a military-designated aircraft, the MQ-35A V-BAT.

At Shield AI, and in the context of national security, we believe AI-piloted systems will be the greatest military deterrent of our generation. We must get it right. We must also understand the magnitude of its ability to transform our nation’s fighting force for the better, if we allow its development to flourish. A brief analogy: when internet adoption began, many people misunderstood what the internet was. People thought the internet was about email, checking stocks, and MapQuest, when in reality, those were simply products that leveraged the internet. As we appreciate today, the internet is about connecting every individual on the planet to information, businesses, and each other.

AI as a technology is on a similar journey. People have thought AI is about recognizing pictures or chatbot assistants like ChatGPT. Those are simply products that leverage AI. AI is really about systems that can perceive, think, and act on the world around them, and then learn from these experiences. Like the internet, the products derived from AI will change the world. From a national security perspective, this iteration of AI pilots both excites and concerns me.

It excites me because Shield AI has spent nine years and hundreds of millions of dollars of our own money to develop our AI pilot, which is flying F-16s in combat scenarios, flying teams of MQ-35As, and is deploying on quadcopters and saving the lives of American and allied servicemembers who are fighting on the ground in combat. We know for a fact that our AI pilot makes a life-or-death difference in war, and we know that when safeguarded by the ethical framework codified by the DoD on lethal autonomous weapon systems, AI pilots can achieve, at scale, the deterrent effects not seen since the emergence of our nuclear capability.

This iteration of AI concerns me because our near-peer adversaries, the most notable, of course, being China, are running fast with the development of AI capabilities for national security purposes. President Xi has pledged to “achieve world-leading levels” in all AI fields by 2030 and has directed China’s military leadership to create a “highly informatized” force – focusing on “the integral components” of AI and electronic warfare. China’s military leadership believes that “AI-enabled warfare represents a military technology revolution on par with the mechanization and informization revolutions of the 20th century.” Indeed, Chinese media has benchmarked Chinese AI pilot developments against Shield AI. We are in the enviable position of being able to watch our adversary’s development side by side with our own advancements; why would we not take the chance to decisively outrun the threat we see in front of us?

With respect to national security, I believe we can win the AI race, but it will be a very hard race to win and requires a unity of effort and a sense of urgency from industry and government. Like the race to build the atomic bomb, we can’t afford to lose the AI race. As a country, we needed to be ramping up at speed yesterday – and not spending our resources on capabilities that don’t deter or, if necessary, help win a war against a peer adversary. We need to focus our time, energy, and money on AI pilots that can read, react, and amplify our capabilities “on the edge”, on the battlefield. Anything less than this means we fail to deter our enemies (who have no compunction about forgoing guardrails around their AI).

The DoD is further along than any other agency when it comes to guidance around developing AI. It has provided direction for ethical principles, data policies, privacy, weapons engagement, and beyond. DoD’s policy on AI and autonomy in weapons systems is covered extensively in DoD Directive 3000.09, which requires that all systems, including lethal autonomous weapon systems, be designed to “allow commanders and operators to exercise appropriate levels of human judgment over the use of force.” For any company selling to the DoD, it is an expectation that we comply with these regulations, and for companies whose entire mission is to bolster national security, like Shield AI, we do not see any other option and we operate and build accordingly.

Working with the DoD on incorporating AI pilots into the force structure has been difficult and murky. There are still many blockers standing in the way of getting service members what they need to be successful in the future fight. Top of mind for me: the DoD claims to be spending $4 billion on AI, yet I observe almost no programs of record that are built on AI and focused on deterring adversaries, leading me to question where this $4 billion is being allocated. As we observe new types of warfare, where the large, exceptional military arsenals we have built can be incapacitated by small, cheaply built adversary armaments, we need the DoD to change the way it builds its force of the future: switching the paradigm away from what worked in the past and focusing its resources on the next game-changing technological assets. Adopt AI pilots too slowly, and we will fail. Bold actions are required if we are to win.

What Congress Can Do

Congress has a major role in meeting the challenges in AI for national security, and your interest in listening to industry perspectives today energizes technologists, like me, who have devoted our lives to deploying this technology for good. My recommendations are as follows:

- While the DoD has implemented a variety of policies and guidance for uses of AI, and there will be more, it is vital that Congress help correct missteps without slowing the progress of good policies. The recently released Executive Order on Artificial Intelligence included some exemptions for technology used for national security. This is a wise step, and Congress should include similar considerations as it writes policy that must effectively distinguish between consumer-facing uses of AI and those specifically built for military applications, while not diminishing the need to build trust in, and verification of, the technology.

- Industry needs Congress to help the DoD move quickly and effectively. There are the obvious needs of stable funding for innovation adoption and procurement, but those will be hindered without a shift in thinking. Most DoD programs today fund hardware and then include the purchase of software as an afterthought, or not at all. Autonomy software needs to be budgeted and contracted from the beginning of program inception – just as an engine or the onboard computer is budgeted and purchased.

- Support and encourage military leaders as they try to make changes away from bloated, expensive force structures. We need a system that rewards people for being creative to meet new challenges while being responsible stewards of the public trust.

- Validating autonomous technology and building trust among military users and the general public is paramount, particularly when utilizing an AI pilot for kinetic strike. I cannot stress enough that this must occur with the utmost certainty and precision. The DoD operates under that assumption as well, and industry takes it just as seriously. The weaponization of autonomous systems (and automation of weapons systems) is inevitable. Indeed, it’s a historical fact: the Tomahawk Anti-Ship Missile (TASM), fielded in the 1980s and capable of searching for and engaging Soviet vessels, was arguably the first operational fully autonomous weapon. At first, people were skeptical of how it would reach its target once fired. Now, it’s a generally trusted technology. Autonomous software will go through the same iterative process, and Congress needs to be a leader in the public conversation about where this process must go, while allowing the DoD and industry to perform with the full force of our capability.

At Shield AI, we believe that AI pilots are a deterrent, and the greatest victory requires no war. AI will be the centerpiece of deterrence for decades to come if it is properly funded and developed at a pace ahead of our adversaries. Our company works tirelessly to support our warfighters.

Recently I’ve become the proud father of two young children, and I often get asked the question: Would you support your son or daughter if they want to serve our country in the armed services? My answer is an unequivocal yes, but with the understanding that America and our elected leaders will do everything in their power to ensure that life-or-death conflict against America’s warfighters forever remains the most technologically lopsided contest for our adversaries. Thank you for inviting me to participate today. I look forward to the discussion.