Over your career, what have you witnessed with respect to the evolution of state estimation?

State estimation systems have become smaller and more ubiquitous, and they have integrated new sensors. Much of this was driven by GPS. Before GPS, state estimation systems were often built around large, expensive sensors and were used in large, expensive vehicles such as aircraft and spacecraft. GPS provided a small, cheap system that enabled localization almost anywhere on the planet. This opened the door to new markets such as automobiles, personal navigation, and robotics. Along with the proliferation of small consumer electronics, namely smartphones, this has also driven the miniaturization of other sensor technologies, such as inertial measurement units (IMUs), which are used to estimate orientation in addition to position.

GPS does not solve every navigation problem, though. As new capabilities emerged in new markets, it became apparent that GPS, while it had helped enable these developments, was also limiting them. For instance, GPS does not work well without a clear view of the sky, and GPS service can be denied by sophisticated signal-blocking techniques. This has driven a surge in GPS-denied navigation research, which focuses on fusing information from many sources, including sensors such as cameras that had not traditionally been widely used in navigation.
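One simple way to see why combining sources helps is inverse-variance weighting of independent estimates. The sketch below is illustrative only, assuming each sensor delivers an unbiased 1-D position with a known variance; the function name `fuse` and the sample numbers are hypothetical, not a description of any production system.

```python
def fuse(estimates):
    """Fuse independent, unbiased 1-D estimates given as (value, variance) pairs.

    Each estimate is weighted by the inverse of its variance, so more certain
    sensors count for more. The fused variance is never larger than the
    smallest input variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total_weight
    return value, 1.0 / total_weight


# Hypothetical example: camera-based and lidar-based position estimates (m, m^2).
camera = (10.0, 4.0)
lidar = (12.0, 1.0)
position, variance = fuse([camera, lidar])
print(f"fused position {position:.2f} m, variance {variance:.2f} m^2")
```

The fused value lands closer to the more certain lidar estimate, and its variance is smaller than either input's.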

In a high-growth environment such as Shield AI, how is state estimation thought of differently?

There are two primary differences. First, at a company like Shield AI, on an entrepreneurial team building a new product, every state estimation engineer works on every part of the problem end to end, from choosing which sensors go on the product to implementing algorithms to flight testing. In a larger company, an engineer will often focus on one thing, such as a particular sensor or a particular algorithm, for a large part of their career.

Second, development at a company like Shield AI is extremely mission-driven. For instance, at a larger company, my objective might be to write a report describing a new algorithm that we are considering. If I discover a flight condition that the algorithm can’t handle, I’ll include a section in the report describing it. At Shield AI, if I discover an unhandled flight condition, I’ll work with the state estimation team and we’ll dig into the problem immediately, staying on it until we understand and solve it. This kind of personal investment in a mission that we all care about is very rewarding.

How is state estimation different in GPS-denied environments? How does this relate to Nova, which is designed to operate indoors?

Navigation with GPS is well established. To be clear, the science and engineering behind GPS are very complicated and impressive, but building a navigation system around GPS is relatively straightforward. Integration with an inertial measurement unit (IMU) is generally required to estimate orientation, but this process is well understood. Because GPS measures position directly, our position accuracy, which is usually a primary consideration when designing a state estimation system, will never be worse than the accuracy of GPS as long as the GPS signal is available.
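The bound on position accuracy can be seen in the measurement update of a Kalman filter: after fusing a GPS fix with variance R, the position variance becomes P·R/(P + R), which is always smaller than R. Below is a minimal 1-D, position-only sketch, assuming the IMU data has already been integrated into a velocity estimate (a simplification: a real IMU reports accelerations and angular rates, and orientation is omitted entirely). The function names and noise values are hypothetical.

```python
import random


def predict(x, p, imu_velocity, dt, q):
    """Propagate position with an IMU-derived velocity; process noise q grows the variance."""
    return x + imu_velocity * dt, p + q


def update(x, p, gps_position, r):
    """Standard scalar Kalman update with a GPS position fix of measurement variance r."""
    k = p / (p + r)  # Kalman gain: how much to trust GPS relative to the prediction
    return x + k * (gps_position - x), (1.0 - k) * p


def simulate(steps=200, dt=0.1, gps_std=3.0, seed=1):
    """Vehicle moving at constant speed with noisy IMU and GPS (all values hypothetical)."""
    rng = random.Random(seed)
    true_x, true_v = 0.0, 2.0        # metres, metres per second
    x, p = 0.0, 100.0                # initial estimate and its (large) variance
    q, r = 0.5, gps_std ** 2         # process and GPS measurement variances
    variances = []
    for _ in range(steps):
        true_x += true_v * dt
        imu_v = true_v + rng.gauss(0.0, 0.2)       # noisy IMU-derived velocity
        x, p = predict(x, p, imu_v, dt, q)
        gps = true_x + rng.gauss(0.0, gps_std)     # noisy GPS fix
        x, p = update(x, p, gps, r)
        variances.append(p)
    return x, true_x, variances


estimate, truth, variances = simulate()
print(f"final error {abs(estimate - truth):.2f} m, final std {variances[-1] ** 0.5:.2f} m")
```

Every posterior variance in `variances` stays below the GPS variance of 9 m², which is the filter-level version of "never worse than GPS."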

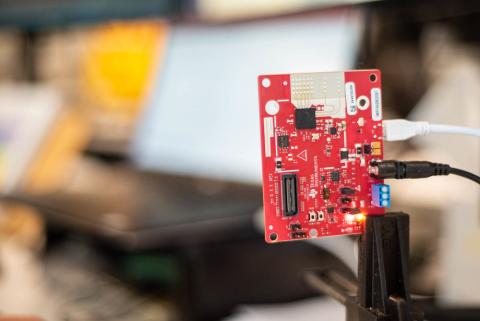

In GPS-denied environments, by contrast, we need to incorporate other sensors to help observe position. In some cases, this can be done simply by modifying the operating environment to include radio beacons or fiducials (visual reference points at known locations), which enable us to measure position. However, Shield AI’s robots fly in previously unseen environments, where no such infrastructure has been installed in advance. Our robots therefore carry a suite of different sensors, including cameras, 3D cameras, and LIDARs, that support navigation.
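Beacons that report their range make position directly observable. The sketch below shows 2-D trilateration by linear least squares, assuming each beacon's position is known and a range to it has been measured (for example from radio time of flight); `trilaterate` and the beacon layout are hypothetical. Subtracting the first range equation from the others removes the quadratic terms in the unknown position, leaving a linear system.

```python
import math


def trilaterate(beacons, ranges):
    """Estimate a 2-D position from ranges to beacons at known positions.

    For each beacon i > 0, subtracting the range equation of beacon 0 gives
    2(xi - x0) x + 2(yi - y0) y = r0^2 - ri^2 + xi^2 - x0^2 + yi^2 - y0^2,
    which is solved by least squares via the 2x2 normal equations.
    """
    (x0, y0), r0 = beacons[0], ranges[0]
    ata = [[0.0, 0.0], [0.0, 0.0]]  # A^T A
    atb = [0.0, 0.0]                # A^T b
    for (xi, yi), ri in zip(beacons[1:], ranges[1:]):
        a = (2.0 * (xi - x0), 2.0 * (yi - y0))
        b = r0**2 - ri**2 + xi**2 - x0**2 + yi**2 - y0**2
        for j in range(2):
            atb[j] += a[j] * b
            for k in range(2):
                ata[j][k] += a[j] * a[k]
    det = ata[0][0] * ata[1][1] - ata[0][1] * ata[1][0]
    if abs(det) < 1e-12:
        raise ValueError("beacons are collinear; position is not observable")
    x = (atb[0] * ata[1][1] - ata[0][1] * atb[1]) / det
    y = (ata[0][0] * atb[1] - atb[0] * ata[1][0]) / det
    return x, y


beacons = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
true_position = (3.0, 4.0)
ranges = [math.hypot(true_position[0] - bx, true_position[1] - by) for bx, by in beacons]
print(trilaterate(beacons, ranges))
```

With at least three non-collinear beacons the position is fully determined; extra beacons average down range noise.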

The processing required to extract useful navigation information differs for each sensor, but it is conceptually similar. We can understand the basic idea by considering visual navigation, which uses cameras for state estimation. Imagine you are driving on the highway, looking out the window. If you didn’t already know which direction you were travelling, you could watch the scenery flying by and figure it out. In visual navigation, we would do this by pointing a digital camera out the window to record video while driving. We would then compare two successive frames of the video to see how the scenery changed, which tells us something about how the camera moved between the two frames. For instance, if the scenery moved slightly to the right, this implies that the camera, and therefore your vehicle, moved to the left in the camera’s reference frame. This is the basic idea behind visual navigation.
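The frame-to-frame comparison can be illustrated on a single image row. This is a toy sketch, not a production pipeline: it assumes pure sideways translation, a pinhole camera with a known focal length, and a known depth to the scene (a single camera cannot recover absolute scale on its own, so depth must come from elsewhere, such as a 3D camera). It finds the pixel shift by brute-force matching and converts it to camera motion with motion = -depth × shift / focal length; the function names and numbers are hypothetical.

```python
def estimate_shift(prev_row, next_row, max_shift):
    """Return the integer shift s (pixels, positive = rightward) for which
    next_row[i + s] best matches prev_row[i], by minimizing the mean squared
    difference over the pixels the two rows share at that shift."""
    n = len(prev_row)
    best_shift, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        pairs = [(prev_row[i], next_row[i + s]) for i in range(n) if 0 <= i + s < n]
        cost = sum((a - b) ** 2 for a, b in pairs) / len(pairs)
        if cost < best_cost:
            best_shift, best_cost = s, cost
    return best_shift


def camera_translation(shift_px, focal_px, depth_m):
    """Sideways camera motion in metres (positive = rightward) under a pinhole
    model, assuming pure translation and a known scene depth."""
    return -shift_px * depth_m / focal_px


# One row of brightness values from two successive frames; the bright feature moves 2 px right.
frame_1 = [0, 0, 1, 5, 9, 5, 1, 0, 0, 0, 0, 0]
frame_2 = [0, 0, 0, 0, 1, 5, 9, 5, 1, 0, 0, 0]
shift = estimate_shift(frame_1, frame_2, max_shift=3)
motion = camera_translation(shift, focal_px=500.0, depth_m=10.0)
print(shift, motion)  # scenery moved right, so the camera moved left (negative)
```

Real systems track many features in 2-D and solve for full 3-D motion, but the sign relationship is the same one described in the example above.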

Other factors must also be taken into account. In particular, the example above considered only translational motion, in which an object shifts from one point in space to another. The same shift in scenery between the two images could also be produced by rotational motion, with the camera staying in place and rotating to its left. Visual navigation algorithms must therefore distinguish rotational from translational motion, and they must also cope with challenges such as motion blur and difficult lighting conditions.
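A small geometric sketch shows both the ambiguity and one cue that can resolve it. Under a pinhole model (an assumption, as are the focal length and point positions below), sliding the camera sideways shifts a point by an amount that depends on its depth, while yawing the camera shifts a point by an amount that depends only on its bearing. One feature alone can therefore be explained either way, but features at different depths (parallax) separate the two cases. This is an illustration with hypothetical names, not a complete algorithm; real systems estimate rotation and translation jointly.

```python
import math

FOCAL_PX = 500.0  # hypothetical focal length in pixels


def project(x, z):
    """Pinhole projection: pixel coordinate of a point x metres right, z metres ahead."""
    return FOCAL_PX * x / z


def shift_from_translation(x, z, tx):
    """Pixel shift of a point when the camera slides sideways by tx metres (positive = right)."""
    return project(x - tx, z) - project(x, z)


def shift_from_rotation(x, z, theta):
    """Pixel shift of a point when the camera stays put and yaws left by theta radians."""
    x_new = x * math.cos(theta) + z * math.sin(theta)
    z_new = -x * math.sin(theta) + z * math.cos(theta)
    return project(x_new, z_new) - project(x, z)


def looks_like_pure_rotation(shift_near, shift_far, tol_px=0.5):
    """Toy test for two points on the same bearing: rotation moves them equally, translation does not."""
    return abs(shift_near - shift_far) < tol_px


near, far = (0.2, 2.0), (1.0, 10.0)       # same bearing, 2 m and 10 m away
theta = math.atan(0.15) - math.atan(0.1)  # yaw chosen so the near point also shifts 25 px

t_near, t_far = [shift_from_translation(x, z, tx=-0.1) for x, z in (near, far)]
r_near, r_far = [shift_from_rotation(x, z, theta) for x, z in (near, far)]
print(f"translate left: near {t_near:.1f} px, far {t_far:.1f} px")
print(f"rotate left:    near {r_near:.1f} px, far {r_far:.1f} px")
```

Both motions move the near point 25 px to the right, but only translation makes the far point lag behind it.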

By estimating the motion between each pair of successive images in the video and adding up all of these estimates, we can estimate the current position. However, each estimate contains some small error, and these errors accumulate: the more estimates we add (i.e., the longer the video), the larger the error grows. We refer to this accumulated error as position estimation drift.
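Drift is easy to reproduce in simulation. This hedged sketch assumes each frame-to-frame estimate carries independent Gaussian noise plus, optionally, a small constant bias (both values hypothetical). With noise alone, the RMS error grows roughly with the square root of the number of frames; with a bias, it grows linearly. Either way, the error keeps increasing unless something else bounds it.

```python
import math
import random


def dead_reckon(true_steps, noise_std, bias, rng):
    """Sum per-frame motion estimates, each corrupted by a constant bias and random noise."""
    position = 0.0
    for step in true_steps:
        position += step + bias + rng.gauss(0.0, noise_std)
    return position


def rms_drift(n_frames, noise_std=0.01, bias=0.0, trials=200, seed=0):
    """Root-mean-square position error after n_frames, over many simulated runs."""
    rng = random.Random(seed)
    true_steps = [0.1] * n_frames  # hypothetical: 0.1 m of true motion per frame
    truth = sum(true_steps)
    squared = 0.0
    for _ in range(trials):
        error = dead_reckon(true_steps, noise_std, bias, rng) - truth
        squared += error * error
    return math.sqrt(squared / trials)


for n in (100, 400, 1600):
    print(n, "frames:",
          round(rms_drift(n), 3), "m (noise only),",
          round(rms_drift(n, noise_std=0.0, bias=0.001), 3), "m (bias only)")
```

Quadrupling the number of frames roughly doubles the noise-driven error, while the bias-driven error quadruples.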